SEO used to be a tidy equation: rank → click → conversion.

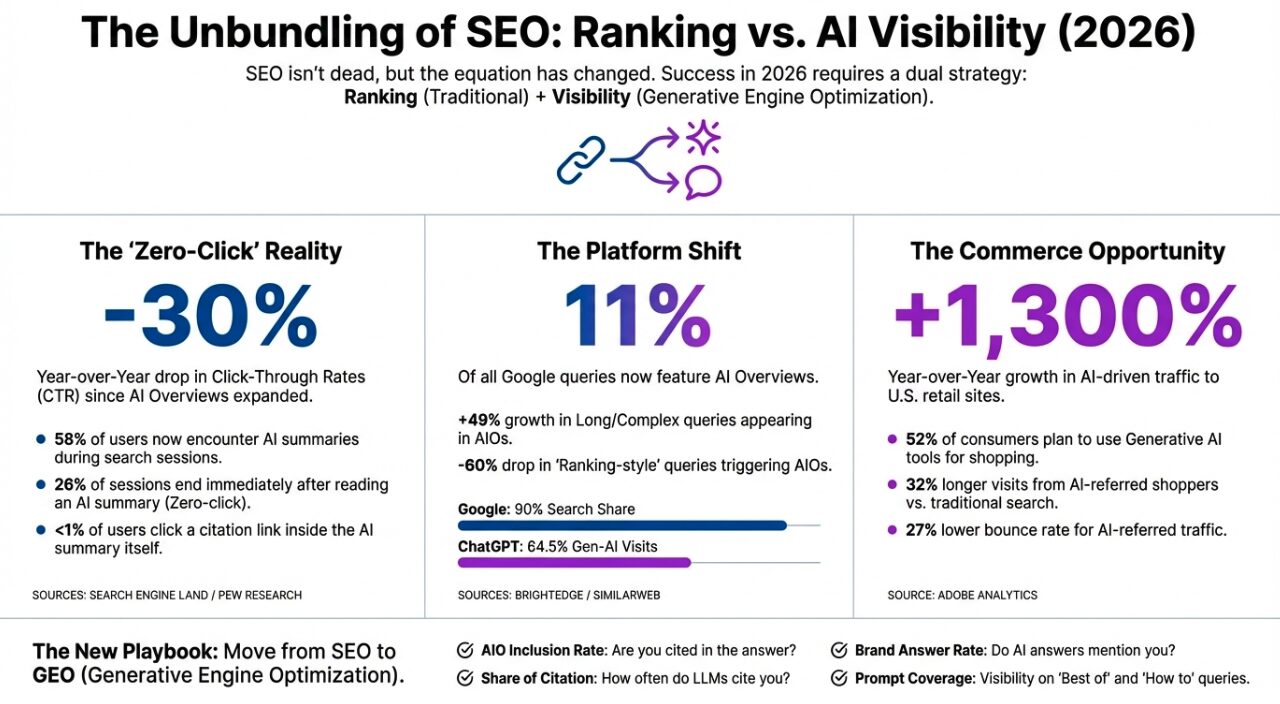

In 2026, the equation has more steps—and fewer guarantees. Google increasingly answers queries directly. Chat-style search is siphoning intent into closed interfaces. And brands are waking up to a new scoreboard: AI/LLM visibility (whether you’re mentioned and cited in AI answers).

Here are 61 stats from recent reports that capture the shift.

The search results page is becoming the product

Search is no longer just a directory—it’s increasingly an answer interface. As AI Overviews expand, the battle shifts from “winning a blue link” to being included in the synthesized response that sits above everything else. That changes how brands measure success: visibility and citation placement become as important as rank.

1) AI Overviews (AIOs) reached their one-year milestone on May 14, 2025, a year after their May 2024 debut. (BrightEdge)

2) AIOs now appear in 11%+ of Google queries. (BrightEdge)

3) That’s a ~22% increase in AIO presence since debut. (BrightEdge)

4) Total search impressions increased 49%+ in BrightEdge tracking since AIO launch. (BrightEdge)

5) Click-throughs declined nearly 30% since May 2024 in the same BrightEdge analysis. (BrightEdge)

6) Search Engine Land summarized that as a ~30% year-over-year CTR drop alongside AIO expansion. (Search Engine Land)

7) Longer, complex queries shown in AIOs grew ~49% since May 2024. (BrightEdge)

8) “Ranking-style” queries in AIOs fell ~60%. (BrightEdge)

9) Comparison queries in AIOs fell ~14%. (BrightEdge)

10) The industries with the strongest AIO presence include Healthcare, Education, B2B Tech, and Insurance. (BrightEdge)

Pew’s dataset makes the “zero-click” story painfully concrete

A lot of AI SEO talk is anecdotal—Pew brings receipts. Their behavioral dataset shows exactly how user clicks change when an AI summary appears, and how often people simply stop browsing after getting an answer. For marketers, this is the clearest evidence that traffic is being structurally compressed, not temporarily “down.”

11) Pew’s analysis used browsing data from 900 U.S. adults (March 1–31, 2025). (Pew Research Center)

12) Pew analyzed 68,879 unique Google searches. (Pew Research Center)

13) 12,593 of those searches produced an AI summary. (Pew Research Center)

14) In Pew’s sample, 18% of Google searches produced an AI summary. (Pew Research Center)

15) 58% of respondents conducted at least one search that produced an AI summary in March 2025. (Pew Research Center)

16) When an AI summary appeared, users clicked a traditional result link in 8% of visits. (Pew Research Center)

17) Without an AI summary, users clicked a traditional result link in 15% of visits. (Pew Research Center)

18) Users clicked a link in the AI summary itself only 1% of the time. (Pew Research Center)

19) Users ended their browsing session after a page with an AI summary 26% of the time. (Pew Research Center)

20) Users ended their browsing session after a page without an AI summary 16% of the time. (Pew Research Center)

21) In Pew’s study, the median AI summary was 67 words. (Pew Research Center)

22) AI summaries ranged from 7 to 369 words. (Pew Research Center)

23) 88% of AI summaries cited three or more sources. (Pew Research Center)

24) Only 1% of AI summaries cited a single source. (Pew Research Center)

25) Just 8% of one- or two-word searches produced an AI summary. (Pew Research Center)

26) 53% of searches with 10+ words produced an AI summary. (Pew Research Center)

27) 60% of searches beginning with question words (“who,” “what,” “when,” “why,” etc.) produced an AI summary. (Pew Research Center)

28) 36% of searches that used full sentences (noun + verb) produced an AI summary. (Pew Research Center)

29) Wikipedia, YouTube, and Reddit were the most frequently cited sources across AI summaries and standard results in Pew’s analysis. (Pew Research Center)

30) Those three sources accounted for 15% of sources in AI summaries (and 17% in standard results). (Pew Research Center)

31) .gov sources were linked more often in AI summaries (6%) than standard results (2%). (Pew Research Center)
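To make the Pew numbers easier to compare, here is a small arithmetic sketch that uses only the figures quoted above. The helper name `relative_change` is ours, not Pew's, and the relative-change percentages it prints are derived values rather than numbers Pew published.

```python
# Figures quoted from the Pew stats above.
total_searches = 68_879
searches_with_summary = 12_593

click_rate_with_summary = 0.08      # clicked a traditional link, AI summary shown
click_rate_without_summary = 0.15   # clicked a traditional link, no AI summary
session_end_with_summary = 0.26     # ended browsing after a page with a summary
session_end_without_summary = 0.16  # ended browsing after a page without one


def relative_change(new: float, old: float) -> float:
    """Return the change from old to new as a fraction of old."""
    return (new - old) / old


summary_share = searches_with_summary / total_searches
click_change = relative_change(click_rate_with_summary, click_rate_without_summary)
session_end_change = relative_change(
    session_end_with_summary, session_end_without_summary
)

print(f"Share of searches with an AI summary: {summary_share:.1%}")
print(f"Relative change in traditional-link clicks: {click_change:+.1%}")
print(f"Relative change in session endings: {session_end_change:+.1%}")
```

Read this way, the 7-point gap between 15% and 8% is a drop of roughly 47% in relative terms, which is the framing that matters when forecasting traffic.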

Zero-click isn’t a vibe—it’s measurable behavior at scale

Clickstream studies help quantify what most teams are already feeling in analytics: fewer searches turn into visits. When only a fraction of searches produce an open-web click, classic SEO KPIs (sessions, CTR) become lagging indicators. The strategic implication is simple: you need visibility metrics that capture influence without a click.

32) For every 1,000 Google searches in the U.S., only 360 clicks go to the open web (clickstream-based estimate). (SparkToro)

33) For every 1,000 Google searches in the EU, only 374 clicks go to the open web. (SparkToro)

34) Bain estimates organic web traffic is down ~15% to 25% as zero-click usage rises. (Bain)

35) Bain reports ~80% of consumers rely on AI/zero-click results for at least 40% of their searches. (Bain)

LLM visibility: citations are consolidating, referrals are thinning

Chatbots can mention you without sending you traffic—and increasingly, they do. Citation share is concentrating among a small set of “trusted” domains, while outbound referrals fluctuate or decline. Brands that don’t track mentions and citations risk losing mindshare even if their traditional rankings look fine.

36) Referral traffic from ChatGPT to websites fell 52% since July 21, 2025 in an analysis of 1B+ citations and 1M+ referral visits. (Search Engine Land)

37) Reddit citations rose 87% since July 23, 2025, reaching 10%+ of ChatGPT citations. (Search Engine Land)

38) Wikipedia citations rose 62% from their July low, reaching nearly 13% citation share. (Search Engine Land)

39) Wikipedia, Reddit, and TechRadar accounted for 22% of all citations in that dataset. (Search Engine Land)

40) Those top three sites’ citation share grew 53% in a month. (Search Engine Land)

The market is still Google-first—but the “AI layer” is a new battleground

Google still dominates distribution, but discovery is splintering into multiple AI surfaces: AIOs, chat assistants, and standalone answer engines. That means your content strategy has to travel well—structured, attributable, and easy to quote. The winners won’t just rank; they’ll get picked.

41) Google maintains 90%+ search market share, even as AI discovery expands. (BrightEdge)

42) ChatGPT grew 21% in a month in BrightEdge’s snapshot of AI discovery platforms. (BrightEdge)

43) BrightEdge describes Perplexity and Gemini as roughly 1/10 the size of ChatGPT (in that snapshot). (BrightEdge)

44) Similarweb’s January 2026 Global AI Tracker: ChatGPT held ~64.5% of gen-AI chatbot site visits. (Search Engine Journal)

45) Similarweb’s January 2026 Global AI Tracker: Gemini reached ~21.5% of gen-AI chatbot site visits. (Search Engine Journal)

Commerce is following attention into AI

Where shoppers research, revenue follows. The early data suggests generative AI is moving beyond novelty into a meaningful referral and decision-support channel—especially in retail. For SEO leaders, this is the clearest argument that AI visibility isn’t just brand-building; it’s pipeline.

46) AI-driven traffic to U.S. retail sites rose 1,300% YoY (Nov 1–Dec 31, 2024). (Adobe)

47) AI-driven traffic was up 1,950% YoY on Cyber Monday 2024. (Adobe)

48) By July 2025, Adobe reported AI-driven retail traffic up 4,700% YoY. (Adobe)

49) AI-driven traffic was up 1,100% in January 2025 vs. a July 2024 baseline. (Adobe)

50) AI-driven traffic was up 3,100% in April 2025 vs. that same July 2024 baseline. (Adobe)

51) Adobe’s consumer survey: 38% have used generative AI for online shopping. (Adobe)

52) Adobe’s consumer survey: 52% plan to use generative AI for shopping this year. (Adobe)

53) Shoppers arriving from generative AI sources were 10% more engaged than shoppers arriving from non-AI sources, per Adobe. (Adobe)

54) Adobe reported 32% longer visits for AI-referred shoppers. (Adobe)

55) Adobe reported a 27% lower bounce rate for AI-referred shoppers. (Adobe)
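Four-digit growth percentages are easy to misread, so here is a minimal sketch that converts the Adobe increases quoted above into multiples of their baselines. The function name `growth_multiple` and the dictionary labels are illustrative. The multiples are simple arithmetic on the quoted percentages, not figures Adobe reported.

```python
def growth_multiple(percent_increase: float) -> float:
    """Convert a percent increase into a multiple of the baseline."""
    return 1 + percent_increase / 100


# Percent increases quoted from the Adobe stats above.
adobe_increases = {
    "Holiday season 2024 (YoY)": 1_300,
    "Cyber Monday 2024 (YoY)": 1_950,
    "July 2025 (YoY)": 4_700,
    "January 2025 vs. July 2024": 1_100,
    "April 2025 vs. July 2024": 3_100,
}

for label, pct in adobe_increases.items():
    print(f"{label}: +{pct:,}% is {growth_multiple(pct):g}x the baseline")
```

A 1,300% increase is 14x the baseline, not 13x, because the increase sits on top of the original 100%. Large multiples on a small base can still be small in absolute terms, so pair these figures with raw visit counts where you have them.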

GEO (Generative Engine Optimization) is moving from theory to practice

GEO is the emerging discipline of optimizing for inclusion in generative answers, not just ranking in lists. The research is valuable because it treats AI visibility as measurable—and therefore improvable—through changes to content structure, evidence, and clarity. In other words, there’s a playbook forming, and it looks different from classic SEO.

56) Researchers introduced “Generative Engine Optimization (GEO)” as a framework for improving visibility inside generative engines. (arXiv)

57) GEO-bench includes 10,000 queries for evaluation. (arXiv)

58) The paper reports GEO can boost visibility by up to 40% in generative engine responses. (arXiv)

59) The paper highlights that GEO effectiveness varies by domain, pointing to the need for vertical-specific tactics. (arXiv)

The publisher problem is no longer theoretical

Publishers are the canary in the coal mine: when platforms answer questions directly, the sites that used to monetize those answers feel it first. Their projections highlight a broader risk for any content-driven business model—relying on search as a guaranteed distribution channel is becoming a fragile assumption.

60) Reuters Institute: publishers expect search traffic to decline ~43% over the next three years. (Reuters Institute)

61) Search Engine Land summarized the same report: news executives expect search referrals to drop 40%+ over three years. (Search Engine Land)

What these stats mean

The takeaway isn’t “SEO is dead.” It’s that SEO is being unbundled into two jobs:

- Ranking (traditional SEO) — still necessary.

- Being selected and cited inside AI answers (GEO / AI visibility) — increasingly decisive.

If you want a clean KPI set for 2026, track:

- AIO inclusion rate (are you cited in AI Overviews for priority queries?)

- LLM citation share (how often ChatGPT/Perplexity/Gemini cite you vs. competitors)

- Brand answer rate (do AI answers mention you when you’re relevant?)

- Prompt coverage (how often you appear for “best,” “vs,” “how to choose,” troubleshooting, and “alternatives to” queries)
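One way to turn the four KPIs above into numbers is sketched below. Everything here is an assumption for illustration: the `AnswerRecord` schema, the surface and category labels, and the brand and domain names are hypothetical. The sketch also assumes you already capture AI answers for a fixed set of prompts where your brand is relevant. Treat it as a starting point, not a standard definition of these metrics.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AnswerRecord:
    """One captured AI answer for a tracked prompt (hypothetical schema)."""
    surface: str                     # e.g. "aio", "chatgpt", "perplexity"
    category: str                    # e.g. "best", "vs", "alternatives"
    mentioned_brands: frozenset[str]
    cited_domains: tuple[str, ...]   # one entry per citation, repeats allowed


def rate(hits: int, total: int) -> float:
    """Return hits / total, or 0.0 when there is nothing to measure."""
    return hits / total if total else 0.0


def visibility_kpis(records: list[AnswerRecord], brand: str, domain: str) -> dict:
    aio = [r for r in records if r.surface == "aio"]
    llm = [r for r in records if r.surface != "aio"]
    llm_citations = [d for r in llm for d in r.cited_domains]

    # Prompt coverage: per category, how often we appear at all
    # (either mentioned by name or cited by domain).
    appearances = defaultdict(list)
    for r in records:
        appears = brand in r.mentioned_brands or domain in r.cited_domains
        appearances[r.category].append(appears)

    return {
        "aio_inclusion_rate": rate(
            sum(domain in r.cited_domains for r in aio), len(aio)
        ),
        "llm_citation_share": rate(llm_citations.count(domain), len(llm_citations)),
        "brand_answer_rate": rate(
            sum(brand in r.mentioned_brands for r in records), len(records)
        ),
        "prompt_coverage": {
            cat: rate(sum(flags), len(flags)) for cat, flags in appearances.items()
        },
    }


# Made-up sample data, purely to show the shape of the input.
records = [
    AnswerRecord("aio", "best", frozenset({"Acme"}),
                 ("acme.example", "wikipedia.org")),
    AnswerRecord("aio", "vs", frozenset(), ("reddit.com",)),
    AnswerRecord("chatgpt", "best", frozenset({"Acme", "Rival"}),
                 ("rival.example", "acme.example")),
    AnswerRecord("perplexity", "alternatives", frozenset({"Rival"}),
                 ("rival.example", "reddit.com")),
]

kpis = visibility_kpis(records, brand="Acme", domain="acme.example")
for name, value in kpis.items():
    print(name, value)
```

Citation share is computed over individual citations, while the other three rates are computed over answers. That split is a design choice: a single answer can cite many domains, so counting answers alone would hide how crowded each citation list is.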