Google AI Overviews have quietly broken the mental model most teams still use to evaluate organic performance. Rankings are stable. Impressions look fine. Clicks are down. And leadership wants to know why SEO is no longer driving the same returns.

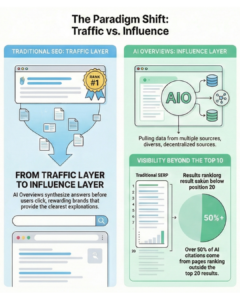

The uncomfortable truth is that search has shifted from a traffic layer to an influence layer. Google AI Overviews now synthesize answers before users ever reach your page. And the brands winning visibility inside those answers are not always the ones ranking number one or even on page one.

After analyzing more than 100 AI Overviews across SaaS and B2B categories, one pattern is clear: AI visibility rewards clarity, structure, and extractability more than raw ranking position. Below are five concrete ways brands are consistently appearing in Google AI Overviews today, along with how growth teams can engineer for this reality without rebuilding their entire SEO stack.

1. They front-load explanations within the first 150 words

Brands that appear in Google AI Overviews almost always explain the topic immediately. Not after a brand story. Not after social proof. Right away.

This matters because systems built on large language models tend to work with content in chunks rather than whole pages, and a chunk of roughly 150 to 300 words is a reasonable working assumption. In our review of AI Overview citations, the referenced explanation almost always came from the first content block on the page, not from sections further down.

Pages that open with clear definitions, context, and “why this matters” language are far easier for Google to extract confidently. This is why glossary pages and beginner explainers continue to outperform product-led landing pages in AI visibility, even when those product pages rank higher.

If your introduction is optimized for persuasion instead of comprehension, you are invisible to AI by default.
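One rough way to audit this is to check whether the topic is even named inside the opening 150 words. The sketch below is illustrative only: the function names and the plain substring match are assumptions, not anything Google documents, and a real audit would run on the page's extracted body text.

```python
def opening_block(text: str, limit: int = 150) -> str:
    """Return the first `limit` whitespace-separated words of `text`."""
    words = text.split()
    return " ".join(words[:limit])


def mentions_topic_early(text: str, topic: str, limit: int = 150) -> bool:
    """True if `topic` appears, case-insensitively, in the first `limit` words.

    This is a plain substring check (an assumption made for simplicity);
    it says nothing about how well the topic is actually explained.
    """
    return topic.lower() in opening_block(text, limit).lower()


if __name__ == "__main__":
    # Hypothetical page copy: the definition comes first, the pitch later.
    page = (
        "Customer segmentation is the practice of grouping customers "
        "by shared traits. " + "More detail follows here. " * 60
    )
    print(mentions_topic_early(page, "customer segmentation"))
```

A page that fails this check is not necessarily a bad page, but it is a quick signal that the introduction may be leading with story or proof instead of explanation.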

2. They write in answer-ready blocks, not narrative essays

AI Overviews do not quote paragraphs the way humans read them. They assemble answers from discrete, self-contained ideas.

Brands appearing consistently structure their content into short, declarative sections that mirror how people ask questions conversationally. Each block answers one thing clearly before moving on.

This is not about chasing featured snippets. It is about reducing cognitive load for machines. When your content already resembles an answer, Google does less synthesis work and is more likely to trust it.

In our analysis, pages cited in AI Overviews typically shared three traits:

- 2–4 sentence paragraphs

- One clear idea per block

- Minimal metaphor or narrative buildup

Readable for humans. Extractable for machines.
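The paragraph-length pattern above is easy to check mechanically. Here is a minimal sketch, assuming paragraphs are separated by blank lines and sentences end in ".", "!", or "?"; the helper names and the four-sentence ceiling (taken from the 2–4 range above) are illustrative choices.

```python
import re


def split_paragraphs(text: str) -> list[str]:
    """Split on blank lines and drop empty chunks."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def count_sentences(paragraph: str) -> int:
    """Rough count: runs of . ! ? followed by whitespace or end of text.

    Abbreviations such as "e.g. " are overcounted; this is a heuristic.
    """
    return len(re.findall(r"[.!?]+(?:\s|$)", paragraph))


def long_paragraphs(text: str, max_sentences: int = 4) -> list[tuple[int, int]]:
    """Return (paragraph_index, sentence_count) for paragraphs over the limit."""
    flagged = []
    for index, paragraph in enumerate(split_paragraphs(text)):
        n = count_sentences(paragraph)
        if n > max_sentences:
            flagged.append((index, n))
    return flagged


if __name__ == "__main__":
    draft = "One. Two. Three.\n\nA. B. C. D. E.\n\nShort one."
    print(long_paragraphs(draft))
```

Flagged paragraphs are candidates for splitting into separate blocks, each carrying one idea.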

3. They earn inclusion through trust signals, not domain authority alone

One of the most counterintuitive findings from AI Overviews analysis is how often citations come from domains ranking outside the top 10.

In our SaaS-focused sample, over half of AI Overview citations came from pages ranking beyond the top 20 organic results. What they had in common was not link volume. It was credibility density.

Pages referenced reputable data sources, clearly stated assumptions, and avoided exaggerated claims. Google appears far more conservative when selecting sources for AI summaries than for traditional rankings.

This mirrors what we see in authority-building campaigns. For example, Relevance helped Michael Bungay Stanier secure expert placements in Forbes, Inc., and NASDAQ, which later surfaced as validation signals in AI-driven summaries around workplace relationships. The visibility came from trust reinforcement, not ranking dominance.

If your content sounds like marketing, AI treats it like marketing.

4. They solve narrow use cases instead of broad category definitions

Broad category pages struggle in AI Overviews because they try to do too much. Brands that appear consistently focus on specific scenarios with clear intent.

Instead of a broad page on “What is customer segmentation?”, AI Overviews tend to favor narrower pages such as:

- Customer segmentation for B2B SaaS

- Customer segmentation for PLG onboarding

- Customer segmentation for retention modeling

These narrower pages give Google something concrete to synthesize. They also align better with how people phrase exploratory queries in AI-first search experiences.

For lean teams, this is good news. You do not need to win the head term. You need to own the clearest explanation of a specific problem your audience actually has.

5. They demonstrate topical depth across clusters, not isolated pages

AI Overviews rarely rely on one-off content. Brands that appear repeatedly tend to publish clusters of reinforcing content around a topic.

When Google sees the same brand explaining adjacent questions accurately across multiple pages, confidence increases. Over time, that brand becomes a default reference point even if no single page ranks first.

This is why pillar-and-cluster strategies matter more now than ever. Not for internal linking alone, but for AI trust accumulation. Each supporting page acts as corroboration that your brand understands the topic holistically.

Topical depth is no longer just an SEO concept. It is an AI eligibility requirement.

What This Means for SEO Teams in an AI-First SERP

Google AI Overviews are not eliminating organic opportunity. They are changing who gets rewarded for clarity and trust.

If your traffic is declining despite stable rankings, the issue is likely not relevance. It is extractability. Brands winning AI visibility are optimizing for how machines understand content, not just how humans consume it.

The teams that adapt fastest will stop chasing position one and start engineering for inclusion. In an AI-mediated search landscape, being the best explanation beats being the highest result.