You already track rankings, ROAS, CAC, maybe even incrementality. But if you are honest, you probably have no structured way to answer a very 2025 question: “Where do AI systems actually see and surface my brand?” AI Overviews, answer engines, chatbots and assistants are quietly becoming a discovery layer that sits above classic search. If you are not measuring that layer, your reporting is missing a growing share of reality.

The good news: AI visibility is trackable enough today to be useful, even if the data is imperfect. With a bit of instrumentation, a handful of tools, and one shared scorecard, you can start treating AI visibility as a performance channel instead of a random bonus.

Here are six ways teams are already tracking it.

1. Start with a page-level analysis

Before you chase AI Overviews and answer engines, you need to know whether your pages are machine-friendly in the first place. Aaron’s AI Visibility Extension (my free extension) gives you a fast diagnostic for that. It rates pages with an AI Visibility Score from 0 to 100, audits schema and structured data, inspects meta tags and flags readability issues such as excessive passive voice.

In practice, this becomes your “AI technical SEO” baseline. High-scoring pages are more likely to be parsed correctly, understood and reused by AI systems. One B2B SaaS team I worked with ran the extension across their top 100 URLs, fixed broken schema on 30 of them and simplified the copy on key feature pages. Over the next quarter, those URLs appeared far more often in AI summaries of their category, and the team saw a noticeable lift in organic demo requests from non-branded queries.
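The extension handles this scoring in the browser. If you also want a scripted sanity check across a URL list (for example, to find every page with broken schema), here is a minimal sketch using only Python’s standard library. It is not how the extension computes its score: the checks (a title, a meta description, JSON-LD blocks that actually parse) are an assumed, deliberately small rubric, and fetching each page’s HTML is left to you.

```python
import json
from html.parser import HTMLParser


class AuditParser(HTMLParser):
    """Collects JSON-LD script bodies, meta tags and the title from one HTML page."""

    def __init__(self):
        super().__init__()
        self.jsonld_blocks = []  # raw text of each <script type="application/ld+json">
        self.meta = {}           # meta name/property -> content
        self.title = ""
        self._in_jsonld = False
        self._in_title = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and (attrs.get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []
        elif tag == "meta":
            key = attrs.get("name") or attrs.get("property")
            if key:
                self.meta[key.lower()] = attrs.get("content") or ""
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.jsonld_blocks.append("".join(self._buffer))
            self._in_jsonld = False
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)  # script text may arrive in several chunks
        elif self._in_title:
            self.title += data


def audit_page(html):
    """Return a few structural checks for one page (hypothetical rubric)."""
    parser = AuditParser()
    parser.feed(html)
    parser.close()
    valid, broken = 0, 0
    for block in parser.jsonld_blocks:
        try:
            json.loads(block)
            valid += 1
        except json.JSONDecodeError:
            broken += 1
    return {
        "has_title": bool(parser.title.strip()),
        "has_meta_description": bool(parser.meta.get("description", "").strip()),
        "jsonld_valid": valid,
        "jsonld_broken": broken,
    }
```

Run it over the HTML of each top URL and sort by `jsonld_broken` to get a first fix list.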

2. Monitor your presence in AI search surfaces

AI Overviews and generative search panels are the new “position zero” for many categories. You cannot control them, but you can absolutely track your presence. The workflow is not glamorous: build a query set, check it on a schedule and log when you appear in an AI panel, citation block or inline link.

High-performing teams treat this almost like early rank tracking. One ecommerce brand I know tracks 50 core queries across Google AI Overviews and Bing Copilot. Every two weeks they record whether an AI panel shows, whether their brand is cited, and how often competitors appear instead. Over three months they used this log to justify investing in deeper educational content that targeted entity relationships and common follow-up questions. The payoff was a 24 percent increase in non-branded revenue from search, with a meaningful share coming from queries where AI Overviews had previously excluded them.

You can even tag these queries in your existing SEO platforms (Ahrefs, Semrush, STAT, etc.) and keep a quick Loom or screenshot library of what AI panels showed each month, so you have a visual history when someone asks “when did we start showing up there?”

You do not need perfect coverage. What matters is directional visibility: are you showing up in AI search surfaces more or less often than last quarter?
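If your query log lives in a spreadsheet, a few lines of Python can turn it into the trend you actually report. The sketch below assumes one row per query, per surface, per check date, holding the three things the ecommerce team above recorded; the field names and surface labels are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class QueryCheck:
    """One check of one query on one AI surface (hypothetical schema)."""
    date: str            # check date, e.g. "2025-01-01"
    query: str
    surface: str         # e.g. "google_ai_overview" or "bing_copilot"
    panel_shown: bool    # did an AI panel appear at all?
    brand_cited: bool    # were we cited or linked inside it?
    competitors_cited: list = field(default_factory=list)


def summarize(checks):
    """Per check date: how often a panel showed, how often we were cited, who beat us."""
    by_date = {}
    for check in checks:
        by_date.setdefault(check.date, []).append(check)

    summary = {}
    for date, rows in sorted(by_date.items()):
        panels = [r for r in rows if r.panel_shown]
        cited = sum(1 for r in panels if r.brand_cited)
        # Count competitors only in panels where we were NOT cited.
        rivals = Counter(
            name for r in panels if not r.brand_cited for name in r.competitors_cited
        )
        summary[date] = {
            "queries_checked": len(rows),
            "panel_rate": len(panels) / len(rows),
            "cited_rate_in_panels": cited / len(panels) if panels else 0.0,
            "top_rivals_when_absent": rivals.most_common(3),
        }
    return summary
```

Comparing `cited_rate_in_panels` from one check to the next is the directional signal; `panel_rate` tells you whether the surface itself is growing for your query set.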

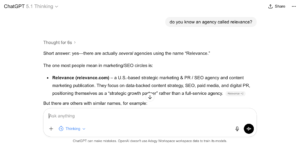

3. Track brand and competitor mentions in AI answer engines

Even if AI search panels feel opaque, AI answer engines are surprisingly trackable. ChatGPT, Perplexity and similar tools return conversational answers you can systematically test. The key is to standardize prompts and log outcomes so you can see trends instead of anecdotes.

Set up a simple spreadsheet (or an Airtable base if you want nicer reporting) with a dozen prompts that reflect real user behavior, such as “best [your category] tools for [segment]” or “alternatives to [competitor].” Run these prompts across major answer engines each month and record whether your brand appears, in what context, and which competitors dominate when you do not. Teams that take this seriously start to notice patterns, such as “we dominate prompts that mention integrations but lose when prompts emphasize pricing.” That insight directly informs your content roadmap and positioning tests.
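Patterns like “we win on integrations, lose on pricing” show up once each prompt carries a theme tag. A minimal sketch, assuming your sheet exports rows of (month, prompt, theme, engine, brand_present); the column layout and theme labels are illustrative.

```python
from collections import defaultdict


def presence_by_theme(rows):
    """Share of prompt runs where the brand appeared, keyed by (month, theme).

    Each row is assumed to be (month, prompt, theme, engine, brand_present).
    """
    counts = defaultdict(lambda: [0, 0])  # (month, theme) -> [present, total]
    for month, _prompt, theme, _engine, present in rows:
        counts[(month, theme)][1] += 1
        if present:
            counts[(month, theme)][0] += 1
    # Sorted so months and themes line up when pasted back into a sheet.
    return {key: present / total for key, (present, total) in sorted(counts.items())}
```

Each engine run counts as one observation, so a prompt tested on three engines contributes three rows to its theme.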

The team behind a mid-market automation tool I worked with found they were invisible in “Zapier alternatives” prompts but consistently mentioned in “workflow automation tools for developers.” That nuance helped them narrow their positioning rather than chase a losing battle.

Example prompts to track regularly:

- “Best [category] platforms for small teams”

- “Top [category] tools under [budget]”

- “Alternatives to [competitor] for [use case]”
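Keeping these as templates lets you run the identical set every month without wording drift. A small sketch that fills each placeholder with every combination of its values; it uses Python-style `{category}` placeholders in place of the bracketed ones, and the example category, budgets and competitor name are hypothetical.

```python
from itertools import product
from string import Formatter

TEMPLATES = [
    "Best {category} platforms for small teams",
    "Top {category} tools under {budget}",
    "Alternatives to {competitor} for {use_case}",
]


def expand(templates, values):
    """Fill every placeholder with every combination of its values."""
    prompts = []
    for template in templates:
        # Formatter().parse yields (literal, field_name, spec, conversion) tuples.
        fields = sorted({name for _, name, _, _ in Formatter().parse(template) if name})
        for combo in product(*(values[f] for f in fields)):
            prompts.append(template.format(**dict(zip(fields, combo))))
    return prompts


# Hypothetical values; swap in your own category, budgets and competitors.
VALUES = {
    "category": ["CRM"],
    "budget": ["$50/month", "$100/month"],
    "competitor": ["CompetitorX"],
    "use_case": ["startups"],
}

for prompt in expand(TEMPLATES, VALUES):
    print(prompt)
```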

If you want to go one step further, visualize the month-over-month changes in a simple Looker Studio (formerly Data Studio) dashboard that pulls from your sheet, so leadership can see the share of prompts where you’re present versus absent.

4. Instrument your analytics for AI influenced traffic

You will not always get a clean referrer from AI tools, but there are enough hints to be worth tracking. Start by grouping sessions coming from domains like chatgpt.com and chat.openai.com, gemini.google.com (formerly Bard), perplexity.ai or similar into an “AI assistants” channel in GA4 where possible. Expect this to be noisy and incomplete, but directionally useful.
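Whether you classify sessions in GA4 or in your own warehouse, the core logic is a hostname match on the referrer. A sketch in Python, assuming the host list shown; it is not exhaustive, and assistant domains change, so check it against the referrers you actually see.

```python
import re
from urllib.parse import urlparse

# Assumed, non-exhaustive list of AI assistant hosts. Verify against your own referrers.
AI_HOSTS = re.compile(
    r"(^|\.)(chatgpt\.com|chat\.openai\.com|gemini\.google\.com|bard\.google\.com"
    r"|perplexity\.ai|copilot\.microsoft\.com|claude\.ai)$"
)


def channel_for(referrer: str) -> str:
    """Map a full referrer URL to a coarse channel label."""
    if not referrer:
        # No referrer: could be anything, including AI apps that strip it.
        return "Direct / unknown"
    host = (urlparse(referrer).hostname or "").lower()
    # (^|\.) matches subdomains like www.perplexity.ai but not notperplexity.ai.
    if AI_HOSTS.search(host):
        return "AI assistants"
    return "Other"
```

A similar pattern can be adapted for a GA4 custom channel group condition on session source; test how your property applies the regex before relying on the numbers.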

Then combine that with self-reported attribution. Update your post-purchase or lead forms to add options like “Asked an AI assistant” and “Found you in AI search results.” When one DTC brand added these options to its checkout survey via a tool like Fairing or KnoCommerce, 11 percent of new customers selected an AI-related discovery option within two months. The referrer data alone never would have revealed that.

Over time, compare how these segments perform in GA4 or your BI tool (Mode, Looker, Tableau). Are AI-influenced visitors converting at rates similar to organic search? Are their cohorts more or less valuable by 90-day LTV? This is where AI visibility starts to connect directly to your CAC and LTV conversations instead of living as an abstract narrative.
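Once sessions and customers carry a segment label, the comparison itself is simple arithmetic. A minimal sketch, assuming you have exported (segment, converted) per session and (segment, 90-day revenue) per new customer; the segment names are hypothetical, and with small AI samples the differences are directional, not statistically significant.

```python
from collections import defaultdict
from statistics import mean


def segment_report(sessions, customers):
    """Conversion rate and average 90-day revenue per acquisition segment.

    sessions:  iterable of (segment, converted: bool), one per session
    customers: iterable of (segment, revenue_90d), one per new customer
    """
    conv = defaultdict(lambda: [0, 0])  # segment -> [conversions, sessions]
    for segment, converted in sessions:
        conv[segment][0] += int(converted)
        conv[segment][1] += 1

    revenue = defaultdict(list)
    for segment, value in customers:
        revenue[segment].append(value)

    report = {}
    for segment in sorted(set(conv) | set(revenue)):
        conversions, total = conv.get(segment, [0, 0])
        report[segment] = {
            "conversion_rate": conversions / total if total else None,
            "avg_ltv_90d": mean(revenue[segment]) if segment in revenue else None,
        }
    return report
```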

Useful survey options to add:

- “Searched on Google or Bing”

- “Asked an AI assistant like ChatGPT”

- “Saw you in an AI-powered search result”
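To track the self-reported share month by month, tally each month’s responses separately. A sketch, assuming your survey tool exports each answer as a short code; the codes below are hypothetical stand-ins for the options above.

```python
from collections import Counter

# Hypothetical export codes for the two AI-related survey options.
AI_CODES = {"ai_assistant", "ai_search_result"}


def ai_discovery_share(responses):
    """Fraction of responses that picked an AI-related discovery option."""
    counts = Counter(responses)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(counts[code] for code in AI_CODES) / total
```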

5. Track entity and schema health across your content

AI systems think in entities and relationships more than keywords. If your brand, products and core concepts are not modeled cleanly, you will struggle to show up in AI-generated recaps, comparison lists and how-to answers. Aaron’s extension already helps you inspect schema, headings and meta tags page by page.

Take that further by building a simple entity map for your brand. List your primary entities (brand, products, key features, core problems you solve) and ensure you have structured data, internal linking and clear copy that consistently reinforce those across your site. One practical approach is to audit your top 50 URLs and mark whether each entity is clearly represented and linked to. A crawler like Screaming Frog or Sitebulb can help you pull all those pages and internal links into a single view so you’re not doing it URL by URL.
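If you export page text and internal links from your crawler, the entity map becomes a small coverage matrix. A minimal sketch; the data shapes, entity name and URLs are hypothetical, and “mentioned” is a crude case-insensitive substring match rather than real entity recognition.

```python
def entity_coverage(pages, entities):
    """Build a URL x entity matrix of "mentioned" and "linked" flags.

    pages:    url -> {"text": page copy, "links": list of internal link targets}
    entities: entity name -> the canonical URL that should be linked for it
    """
    matrix = {}
    for url, page in pages.items():
        text = page["text"].lower()
        links = set(page["links"])
        matrix[url] = {
            name: {"mentioned": name.lower() in text, "linked": target in links}
            for name, target in entities.items()
        }
    return matrix


def gaps(matrix):
    """(url, entity) pairs where the entity is mentioned but not linked."""
    return [
        (url, name)
        for url, row in matrix.items()
        for name, flags in row.items()
        if flags["mentioned"] and not flags["linked"]
    ]
```

The `gaps` list is usually the quickest win: pages that already talk about an entity but never point to its canonical page.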

A growth team at a PLG collaboration tool did exactly this and realized that their flagship feature was described under five different names. After unifying the naming, updating schema and cleaning up internal links, they saw that feature mentioned by name in far more AI-generated “how to collaborate asynchronously” answers. The changes did not spike traffic overnight, but they strengthened the brand’s semantic footprint in ways that are hard to win later.
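Inconsistent naming like this is easy to surface with a text search over crawled copy. A sketch, assuming you have page text keyed by URL and a hand-written list of the non-canonical variants you want to retire; the feature names here are made up.

```python
import re


def find_variant_names(pages, variants):
    """Return url -> variant names found on that page (case-insensitive, whole words).

    pages:    url -> page copy
    variants: non-canonical names you want to retire
    Word boundaries (\\b) assume each variant starts and ends with a letter or digit.
    """
    patterns = {v: re.compile(r"\b" + re.escape(v) + r"\b", re.IGNORECASE) for v in variants}
    hits = {}
    for url, text in pages.items():
        found = [v for v, pattern in patterns.items() if pattern.search(text)]
        if found:
            hits[url] = found
    return hits
```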

6. Build an internal AI visibility scorecard

Individual tools and audits are helpful, but leadership cares about trendlines. To make AI visibility a real KPI, bundle the signals you collect into an internal scorecard that you can review monthly. Treat it like early-stage attribution: imperfect but directionally powerful.

A simple model could weight three buckets: AI technical health, AI presence and AI-influenced outcomes. For each bucket, define two or three metrics you already track and rate each on a 1 to 5 scale. Then combine them into a single AI Visibility Index (a plain average, or a weighted one if some buckets matter more to you) that you show alongside organic and paid performance. You can manage this in a humble Google Sheet or pipe it into whatever you already use for reporting: Looker, Power BI, Mode, even a Notion dashboard.

For example:

| Signal bucket | Example metrics |
|---|---|
| AI technical health | Average AI Visibility Score (Aaron’s extension) on top URLs, schema coverage |
| AI presence | Share of queries where you appear in AI panels |
| AI-influenced outcomes | Conversions and LTV from AI-influenced traffic |
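Turning the table into one number is a two-step average: average the 1 to 5 ratings within each bucket, then take a weighted average across buckets. A minimal sketch; the bucket keys, example ratings and weights are hypothetical, and equal weights reproduce the plain average described above.

```python
def bucket_score(ratings):
    """Average of a bucket's 1-5 metric ratings."""
    if not ratings:
        raise ValueError("each bucket needs at least one rated metric")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must be on a 1 to 5 scale")
    return sum(ratings) / len(ratings)


def visibility_index(scorecard, weights):
    """Weighted average of bucket scores; equal weights give a plain average."""
    total_weight = sum(weights[bucket] for bucket in scorecard)
    return sum(weights[b] * bucket_score(r) for b, r in scorecard.items()) / total_weight


# Hypothetical month: each bucket has two or three rated metrics.
scorecard = {
    "technical_health": [4, 3],
    "presence": [2, 2, 3],
    "outcomes": [3, 4],
}
weights = {"technical_health": 1, "presence": 1, "outcomes": 1}
print(round(visibility_index(scorecard, weights), 2))  # prints 3.11
```

Keep the weights fixed once you pick them; changing them mid-year makes the quarter-over-quarter trend meaningless.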

The goal is not scientific precision. It is to move your organization from “AI visibility is random” to “we have a metric we can improve quarter over quarter.” Once that mindset shifts, prioritizing AI-friendly content, schema fixes and experimentation with AI search surfaces becomes much easier to defend in planning meetings.

Closing

AI visibility will never be as cleanly measurable as last-click conversion tracking, at least not soon. But you do not need perfect data to make smarter decisions. With my free AI Visibility extension as your baseline, a lightweight monitoring cadence for AI search and answer engines, and a simple scorecard that ties it all back to revenue, you can treat AI visibility like any emerging channel. Start small, commit to a consistent measurement ritual, layer it into the tools you already use, and your future self will thank you when stakeholders ask “how visible are we in AI?” and you already have an answer backed by data.