You know the feeling.

You publish the guide. You ship the landing page. You finally get that “best of” mention. And then your CEO forwards you a screenshot from ChatGPT, Perplexity, or Google AI Overviews and asks why your competitor is the recommended answer for your category when you’re the one actually winning deals.

That’s AI visibility. It’s not vibes. It’s not a rebrand of SEO. It’s a new set of platforms where the “result” is a synthesized answer, and your job is to be included, represented correctly, and chosen.

Our research and experience

At Relevance, we sit in the messy middle where growth teams actually live: you need pipeline now, you still have to hit CAC targets, and you’re watching organic traffic get swallowed by zero-click experiences. We’ve been building SEO, digital PR, and distribution systems long enough to recognize the pattern: when the interface changes, the winners are the teams that treat visibility like a portfolio, not a channel.

AI visibility is exactly that kind of interface shift. Google is explicit that AI features like AI Overviews and AI Mode still rely on the fundamentals of search and site quality, even as presentation changes. What’s new is where influence happens and what “winning” looks like when the answer shows up before the click.

To ground this guide in more than our own opinions, we reviewed the latest platform documentation and credible studies on how AI answer experiences affect clicks and sourcing. Pew’s analysis found that users are less likely to click links when an AI summary appears. Ahrefs has also documented fast growth in AI Overviews over time, which matters because frequency changes strategy.

The punchline from all of that research is simple: you’re not optimizing for ten blue links anymore. You’re optimizing to become the material the answer is made from.

What this guide covers

We’ll define AI visibility in plain language, then break down how it differs from SEO, PR, and the newer acronym soup (GEO, AEO, LLMO). From there, we’ll walk through the core pillars that actually move the needle, the tactical plays we’ve seen work across industries, and a realistic implementation roadmap you can run with a lean team. We’ll also cover measurement, because if you can’t explain progress to leadership, this dies as a “2025 experiment” and never becomes a durable advantage.

What AI visibility actually is

AI visibility is your brand’s ability to show up accurately and favorably inside AI-mediated discovery and decision experiences.

That includes:

- Getting cited as a source in AI-generated answers

- Being named as a recommended option in category and comparison prompts

- Having your product facts represented correctly (pricing model, integrations, positioning, pros and cons)

- Being selectable when agents move from research to action, especially in shopping and embedded commerce flows

If you want a clean mental model, think of AI visibility as three stacked outcomes:

- Inclusion: The model surfaces your brand at all.

- Accuracy: The model describes you correctly, consistently.

- Preference: The model recommends you, or routes users toward you, when alternatives exist.

Traditional SEO mostly focuses on ranking position. AI visibility fights for answer composition and recommendation probability.

And yes, this already matters. Google’s AI features are becoming more common, and third-party research shows AI Overviews changing click behavior in a way that can blunt traditional organic demand capture.

Why this is happening now

Two shifts are colliding.

First, search is turning into an answer engine. Pew’s analysis of Google usage in March 2025 found users were less likely to click through to other websites when an AI summary appeared. Even if your rankings don’t change, your traffic can, because the interface is doing more of the job on the SERP.

Second, “discovery” is spilling outside Google. Chat-based search experiences are increasingly normal, and platforms are explicitly positioning themselves as gateways to web sources and actions. OpenAI’s own product messaging emphasizes that ChatGPT search blends a conversational interface with links to web sources. Microsoft positions Copilot Search in Bing around transparency and prominent citations to sources. Perplexity describes its workflow as real-time web search plus summarized answers with citations.

So the same question your prospects used to type into Google is now being asked in half a dozen places, each with its own sourcing behavior.

Which means you need visibility systems, not single-channel hacks.

Key distinctions and clarifications

AI visibility vs traditional SEO

Here’s where teams get tripped up: AI visibility is built on SEO fundamentals, but it isn’t measured the same way.

Google’s own documentation on AI features makes the point that the best practices for SEO remain relevant for AI experiences, and then adds new guidance around technical requirements, measurement, and controls. So if your site is slow, thin, or untrustworthy, AI visibility will not save you.

The difference is what you optimize for. SEO has historically been about ranking pages. AI visibility is about producing content, entities, and validation that an AI system is willing to synthesize and cite.

Here’s an example:

A “What is SOC 2?” explainer might have ranked for years and driven top-of-funnel traffic. In AI Overviews, that same query can be satisfied without a click. Success metrics change from “rank #2” to “be one of the cited sources” and “be the security vendor named when the user asks what to buy next.”

AI visibility vs GEO, AEO, LLMO

Let’s be honest: most of these acronyms are marketers trying to name the same anxiety.

What’s useful is the underlying idea: optimizing for answer engines and LLM-mediated discovery is not identical to optimizing for classic SERPs. Aleyda Solis has mapped differences between traditional SEO and what she calls GEO, including how results are presented and what KPIs you track.

In this guide, we’ll use AI visibility as the umbrella term because it covers more than “ranking in an AI answer.” It also includes brand representation, third-party validation, and the commerce layer that’s starting to form as agents move from research into transactions.

AI visibility vs PR

PR used to be “awareness” for most performance teams. AI makes PR operational.

AI systems like to validate. They pull from multiple sources, and they favor consistent signals from across the web. That means earned media, credible reviews, and authoritative mentions can stop being a brand nice-to-have and start being the evidence an answer engine leans on.

A core benefit of PR has shifted: it now shapes the ecosystem of sources that trains and feeds the summaries prospects trust.

AI visibility for B2B vs ecommerce

If you sell B2B SaaS, AI visibility tends to show up as category recommendations, vendor comparisons, and “what should I do” workflows.

If you’re ecommerce, AI visibility is quickly becoming “can an agent understand and transact your catalog.” OpenAI is actively pushing deeper shopping research experiences for product comparisons. And with the Agentic Commerce Protocol, they’re making embedded purchasing flows possible inside ChatGPT.

Different platforms. Same principle: become the obvious, verifiable choice.

How SEO, AI visibility, and digital PR compare

| Dimension | Traditional SEO | AI visibility | Digital PR |
|---|---|---|---|
| Primary win | Rank and earn the click | Be cited and recommended | Earn trusted mentions |
| Main platforms | Google SERPs | AI Overviews, chat search, copilots | Media, newsletters, communities |
| What’s “indexed” | Pages | Pages plus entities plus validation | Stories, citations, reputation |
| Core unit | Page and query | Answer chunk and entity | Mention and narrative |
| Typical KPI | Clicks, rankings | Citation rate, share of answers | Mentions, referral, authority |
| Common pitfalls | Rank but no conversion | Wrong or missing representation | Awareness with no compounding |
| Best leverage | Technical + content | Entity + trust + structure | Authority and third-party proof |
| Timeline | 3 to 9 months | 2 to 12 months | 2 to 12 months |

The 5 pillars of AI visibility

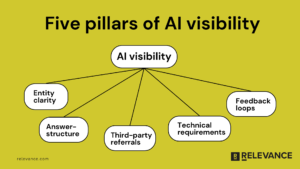

AI visibility looks complicated until you break it into pillars. When we run audits, almost every gap maps cleanly to one of these.

1) Entity clarity and trust signals

AI systems are trying to resolve “what is this thing” before they decide “should I mention it.”

Your job is to make your brand an easy entity to understand and verify. That means consistent naming, consistent positioning, and a clear relationship between brand, product, category, and use case across your site and trusted third-party sources.

In practice, teams miss this in boring ways:

- A product name changes across pricing pages, docs, and press.

- The “who it’s for” statement is vague, so the model defaults to a competitor’s narrative.

- Integrations and capabilities are buried in PDFs or gated pages.

Trust is the other half. Google’s systems have long emphasized quality and “Search Essentials,” and their guidance on AI features points site owners back to those fundamentals. AI visibility is not a shortcut around credibility. If anything, it punishes ambiguity because answers need confidence.

2) Answer-ready content architecture

A lot of teams still write like they’re trying to impress Google’s crawler.

AI systems behave more like readers skimming for extractable meaning. They want crisp definitions, structured comparisons, and paragraphs that stand on their own. Michael King at iPullRank has described AI Overviews as pulling highly relevant passages, not “ranking whole pages” in the traditional sense.

So yes, you still need long-form authority. But you also need “chunkable” sections that can be lifted into an answer without losing context.

A simple pattern that works: define, explain, prove, qualify.

- Define the concept in one tight paragraph.

- Explain how it works with one concrete example.

- Prove it with data or a credible reference.

- Qualify it with when it does not apply.

That structure tends to survive summarization well because it mirrors how answers are composed.

3) Third-party validation

If you want to be recommended, you need the web to agree you exist.

Perplexity explicitly describes pulling from top-tier web sources and summarizing them with citations. Microsoft describes Copilot Search as prominently citing sources so users can validate. Those cues tell you the same thing: being cited is upstream of being trusted.

Validation comes from:

- Earned media and analyst coverage

- High-quality reviews and category pages (think G2 for B2B, major retailers for ecommerce)

- Communities where real users discuss outcomes

- Partner ecosystems that mention you with context, not just a logo

The mistake is treating this as simple link building. Backlinks still help as a signal of authority, but AI visibility also depends on semantic confirmation: multiple independent sources describing you in the same way.

4) Structured data and machine readability

This is the least sexy pillar and the one that quietly separates teams who show up from teams who don’t.

AI systems and modern search features rely on being able to parse what your content is. Google’s guidance on AI features includes technical requirements and measurement considerations, which is a polite way of saying “if we can’t reliably understand your pages, don’t expect to be featured.”

At minimum, most teams need:

- Clean indexation and crawl paths

- Proper canonicalization

- Schema markup for your organization, products, and FAQs where appropriate

- A fast, accessible experience that doesn’t hide key info behind scripts or gated flows

You’re not doing schema because it’s trendy. You’re doing it because structured facts travel better than prose.
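To make “structured facts travel better than prose” concrete, here is a minimal sketch that builds schema.org Organization markup as JSON-LD in Python. The brand name, description, URLs, and profile links are hypothetical placeholders; the property names (`name`, `url`, `logo`, `description`, `sameAs`) come from the schema.org Organization vocabulary. The point is that the values should match, word for word, what your About page and third-party profiles say.

```python
import json


def organization_jsonld(name, url, logo, description, same_as):
    """Build a schema.org Organization object and return it as a JSON-LD string.

    The example values passed in below are hypothetical placeholders.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "description": description,
        # sameAs links tie your entity to external profiles describing the same brand
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld):
    """Wrap JSON-LD in the script element used to embed it in a page's HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    markup = organization_jsonld(
        name="ExampleCo",  # hypothetical brand
        url="https://www.example.com",
        logo="https://www.example.com/logo.png",
        description="ExampleCo is a (hypothetical) compliance automation platform for mid-market SaaS teams.",
        same_as=[
            "https://www.linkedin.com/company/exampleco",
            "https://www.g2.com/products/exampleco",
        ],
    )
    print(as_script_tag(markup))
```

Generating the markup from one source of facts, rather than hand-editing it per page, makes it harder for naming and positioning to drift. Run the output through a structured-data validator such as the Schema Markup Validator before shipping.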

5) Measurement and feedback loops

If you can’t measure progress, this becomes a weird side quest.

And measurement is genuinely messy right now. For example, reporting for AI platforms is still evolving. Google has been rolling AI experiences into existing Search Console reporting rather than always breaking them out cleanly, which makes “did AI Overviews help or hurt us” hard to answer with one chart.

So you need your own feedback loop: prompt testing, citation tracking, and share of answers for your highest value intents.

The teams that win AI visibility are the teams that treat it like paid media experimentation: define a baseline, run tests, track deltas, iterate.

Building your AI visibility strategy

The fastest way to waste time here is to treat AI visibility as “we should do everything.”

Instead, make a few strategic calls up front.

Decision 1: Which AI platforms actually matter for your audiences?

If you sell to developers, Perplexity and GitHub-adjacent behavior may matter more.

If you sell to SMB operators, Google AI Overviews and “best tool for X” prompts in ChatGPT-style interfaces will show up constantly.

If you’re ecommerce, shopping agents and embedded commerce flows are the obvious frontier. OpenAI is explicitly investing in shopping research experiences, and ACP makes transaction flows inside ChatGPT a real roadmap item, not science fiction.

Pick your top two platforms, then optimize outward.

Decision 2: Which intents are worth fighting for?

Not every query is worth chasing.

We usually bucket intents into:

- Problem education (top of funnel)

- Vendor evaluation (middle of funnel)

- Purchase and setup (bottom of funnel)

- Post-purchase support (retention and expansion)

AI visibility tends to be most valuable for informational queries, when buyers are first researching problems and potential solutions. It also plays a big role when they compare vendors or products and look for real recommendations to make a decision.

Decision 3: Are you optimizing for inclusion, accuracy, or preference first?

If you’re not showing up at all, preference is irrelevant.

If you show up but are described incorrectly, fix accuracy before you chase more mentions.

Only once inclusion and accuracy are stable do you start engineering preference: comparisons, category narratives, “when to choose us” proof points, and third-party confirmation.
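The ordering above works as a simple decision rule. Here is a minimal Python sketch of it, assuming you already measure inclusion and accuracy as rates between 0 and 1 across your prompt set. The 0.5 and 0.8 thresholds are arbitrary illustrations, not benchmarks; set them from your own baseline.

```python
def next_focus(inclusion_rate: float, accuracy_rate: float,
               min_inclusion: float = 0.5, min_accuracy: float = 0.8) -> str:
    """Return which outcome to work on first: "inclusion", "accuracy", or "preference".

    inclusion_rate: share of tracked prompts where the brand appears at all.
    accuracy_rate: share of those appearances where the description is correct.
    The default thresholds are hypothetical; tune them to your own data.
    """
    for name, value in (("inclusion_rate", inclusion_rate), ("accuracy_rate", accuracy_rate)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    if inclusion_rate < min_inclusion:
        return "inclusion"   # not showing up, so preference is irrelevant
    if accuracy_rate < min_accuracy:
        return "accuracy"    # showing up but misdescribed: fix the facts first
    return "preference"      # inclusion and accuracy are stable: engineer preference


print(next_focus(0.2, 0.9))  # inclusion
print(next_focus(0.7, 0.6))  # accuracy
print(next_focus(0.7, 0.9))  # preference
```

Checking the rules in a fixed order is the whole design: a strong accuracy score never lets you skip an inclusion problem.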

Decision 4: What is your source of truth?

AI answers are only as good as the sources they can cite.

If your content is generic, you’re interchangeable. That’s where original research, unique data, and first-hand expert content become your moat.

SparkToro’s work on zero-click behavior is a good reminder that influence can happen without a visit. Which means your “source of truth” needs to be compelling enough that even a summarized version still carries your perspective.

AI visibility tactics that drive results

This is the part everyone wants: what to do on Monday.

Here are the plays we’ve seen compound, especially for teams that don’t have endless content budgets.

1. Build an “AI-ready” category page, not just a homepage

Most sites bury their category positioning in brand fluff. AI systems do better when your category definition is explicit.

A strong category page includes:

- A plain language definition of the category

- The core jobs to be done

- A short “how to choose” framework

- Clear differentiation with evidence

- A compact comparison section that doesn’t dodge tradeoffs

If you do this well, you give answer engines a clean source for both educational and evaluative prompts.

2. Create comparison content that is genuinely fair

The easiest AI visibility win for B2B is showing up in “X vs Y” prompts and “best tool for” questions.

But the lazy version of this tactic is a page that says “we’re better than everyone” with no receipts. That tends to get ignored, or worse, it gets summarized as “vendor claims they are best.”

The better version looks like this:

- You explicitly say who each option is best for.

- You list the real tradeoffs.

- You include objective criteria: pricing model, implementation effort, integrations, compliance posture.

- You include third-party proof: reviews, case studies, benchmarks.

When you write with that level of honesty, the AI summary often reads like a recommendation, because it trusts the structure.

3. Use digital PR and original data to earn citations

Want to be cited more? Give the internet something to cite.

The playbook is not “send more press releases.” It’s building assets that journalists, bloggers, and analysts actually need:

- Industry benchmarks

- Usage trend reports

- Cost calculators

- Research on outcomes

Ahrefs built years of authority by publishing research about SEO that people reference constantly. Semrush is doing similar things with AI Overviews studies and commentary. Those are citation engines.

You don’t need a massive budget to start. A small B2B SaaS can run a survey, publish a clean report, and pitch the 20 writers who cover the space. The goal is not one spike. The goal is durable references that show up again and again in AI answers.

4. Make sure your brand facts line up everywhere

AI systems get confused when your facts disagree across sources.

So do this like an ops project:

- Align your About copy, product naming, and positioning

- Standardize pricing language and packaging names

- Make integration lists consistent across docs and landing pages

- Clean up outdated partner pages that misstate what you do

- Ensure your leadership bios, company descriptions, and category labels match everywhere

Boring work. High leverage.
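If you run this as an ops project, the core check is simple: record each fact as each source states it, then flag any fact with more than one distinct value. Here is a minimal Python sketch. The source names, fact keys, and values are hypothetical; in practice you would fill the dictionary from a manual audit or a crawl of your own pages and partner listings.

```python
from collections import defaultdict


def find_conflicts(facts_by_source):
    """Return {fact: {normalized value: [sources]}} for facts stated differently across sources.

    facts_by_source maps a source name to a dict of fact -> stated value.
    Values are compared after trimming whitespace and lowercasing, so
    trivial formatting differences are not flagged.
    """
    seen = defaultdict(lambda: defaultdict(list))
    for source, facts in facts_by_source.items():
        for fact, value in facts.items():
            seen[fact][value.strip().lower()].append(source)
    return {fact: dict(values) for fact, values in seen.items() if len(values) > 1}


# Hypothetical audit data: same product described by three sources
audit = {
    "pricing_page": {"product_name": "Acme Sync", "pricing_model": "per seat"},
    "docs": {"product_name": "AcmeSync", "pricing_model": "per seat"},
    "partner_page": {"product_name": "Acme Sync", "pricing_model": "usage-based"},
}

for fact, values in find_conflicts(audit).items():
    print(fact, values)
```

Each flagged fact, together with the sources that disagree, becomes a cleanup ticket.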

5. Optimize for “answer chunks” inside your best-performing content

This is where teams can move fast without rewriting their entire blog.

Take your top 20 organic pages and retrofit them for answer extraction:

- Add a crisp definition near the top

- Add an FAQ section with real questions buyers ask

- Add a “common mistakes” section

- Add a “how to choose” mini framework

- Add one concrete example with numbers

Google’s guidance on using generative AI content also reinforces a core point: scaled content without value is risky. So resist the temptation to flood the zone with low-effort AI pages. You’ll get more AI visibility by upgrading fewer pieces into true reference assets.
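For the FAQ section in the retrofit list above, one option is to mirror the visible questions and answers in schema.org FAQPage markup. Here is a minimal Python sketch. The questions reuse the SOC 2 example from earlier and the answers are placeholders. Whether a given search feature displays FAQ markup is up to the platform, so treat this as machine readability rather than a guaranteed placement, and keep the markup identical to the on-page text.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs shown on the page."""
    if not pairs:
        raise ValueError("FAQPage markup needs at least one question")
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }, indent=2)


print(faq_jsonld([
    # Placeholder answers: copy the exact visible answer text from your page
    ("What is SOC 2?", "Placeholder: the one-paragraph definition from the page."),
    ("How long does a SOC 2 audit take?", "Placeholder: the page's answer, with its qualifiers."),
]))
```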

6. Ecommerce: get serious about feeds, structured attributes, and agent readiness

If you sell products, AI visibility is quickly becoming feed visibility.

OpenAI’s shopping research experience is built for deeper comparisons and constraints, which means your product data needs to be precise and consistent across sources.

Then there’s the next layer: agentic checkout. OpenAI’s Agentic Commerce documentation frames ACP as a standard for connecting buyers, agents, and businesses to complete purchases. That’s not “someday.” Stripe is already talking about powering Instant Checkout in ChatGPT as part of this ecosystem.

Even if you’re not implementing ACP tomorrow, the direction is clear: structured commerce data and clean inventories are becoming visibility assets.
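A practical first step toward clean inventories is a completeness check on product records before they go into any feed. Here is a minimal Python sketch. The required attribute list is a hypothetical example, not the specification of any particular feed or of ACP, so replace it with the fields your target platforms actually document.

```python
# Hypothetical required fields; substitute the ones your target feed documents
REQUIRED_ATTRIBUTES = ("id", "title", "price", "currency", "availability", "brand")


def feed_issues(products, required=REQUIRED_ATTRIBUTES):
    """Map each product id (or "row N" if the id is missing) to its missing or empty required attributes."""
    issues = {}
    for index, product in enumerate(products):
        missing = [attr for attr in required if product.get(attr) in (None, "")]
        if missing:
            issues[product.get("id") or f"row {index}"] = missing
    return issues


catalog = [
    {"id": "SKU-1", "title": "Trail Shoe", "price": "89.00", "currency": "USD",
     "availability": "in_stock", "brand": "ExampleBrand"},
    {"id": "SKU-2", "title": "Trail Sock", "price": "", "currency": "USD",
     "availability": "in_stock"},
]
print(feed_issues(catalog))  # {'SKU-2': ['price', 'brand']}
```

Running a check like this on every catalog export catches gaps before an agent or shopping experience has to guess.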

7. Run prompt testing like paid media creative testing

Most teams approach AI visibility like SEO circa 2012: publish, wait, hope.

A better approach is to define a prompt set and test weekly.

Here’s a simple set that covers most businesses:

- “Best [category] for [ICP]”

- “[Category] vs [alternative approach]”

- “How do I [job to be done]”

- “[Your brand] pricing, pros, cons”

- “[Competitor] alternatives”
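These templates are easy to expand into a fixed, versioned prompt set, so you test the same wording every week. Here is a minimal Python sketch in which `str.format` placeholders stand in for the bracketed slots. The category, ICP, brand, and competitor values are hypothetical.

```python
TEMPLATES = [
    "Best {category} for {icp}",
    "{category} vs {alternative}",
    "How do I {job}",
    "{brand} pricing, pros, cons",
    "{competitor} alternatives",
]


def build_prompt_set(templates, **slots):
    """Fill each template with the provided slot values.

    A template that references a slot you did not supply raises KeyError,
    which catches typos before they silently change the test set.
    """
    return [template.format(**slots) for template in templates]


prompts = build_prompt_set(
    TEMPLATES,
    category="expense management software",  # all slot values are hypothetical
    icp="remote startups",
    alternative="spreadsheets",
    job="automate employee reimbursements",
    brand="ExampleCo",
    competitor="CompetitorCo",
)
for prompt in prompts:
    print(prompt)
```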

Track whether you appear, whether you are cited, and whether the description matches what you’d want a sales rep to say.

That becomes your feedback loop for what to fix next.

Measuring AI visibility and proving ROI

Let’s say the quiet part out loud: attribution is not clean here yet.

Pew can tell us that AI summaries reduce click behavior on Google. Ahrefs can tell us AI Overviews are expanding in prevalence. But your CFO is still going to ask, “Did this drive revenue?”

So you need a measurement stack that blends direct and directional signals.

Start with leading indicators:

- Share of answers for your category prompts

- Citation rate and source inclusion for your priority pages

- Brand sentiment and accuracy inside answers

- Referral traffic from AI platforms where available
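The first three indicators reduce to simple ratios over a prompt-test log. Here is a minimal Python sketch, assuming each run is logged as one record per prompt and platform with yes/no fields for whether the brand was mentioned, whether one of your URLs was cited, and whether the description was accurate. The field names and metric definitions are our assumptions, since there is no standard formula for these metrics.

```python
from dataclasses import dataclass


@dataclass
class PromptResult:
    prompt: str
    platform: str
    mentioned: bool  # brand named anywhere in the answer
    cited: bool      # one of your URLs listed as a source
    accurate: bool   # description matches approved positioning (only meaningful if mentioned)


def leading_indicators(results):
    """Compute share of answers, citation rate, and accuracy rate from logged prompt tests."""
    total = len(results)
    if total == 0:
        raise ValueError("no results to score")
    mentioned = [r for r in results if r.mentioned]
    return {
        "share_of_answers": len(mentioned) / total,
        "citation_rate": sum(r.cited for r in results) / total,
        # Accuracy is measured only over answers that mention the brand
        "accuracy_rate": sum(r.accurate for r in mentioned) / len(mentioned) if mentioned else 0.0,
    }


# Hypothetical log entries
log = [
    PromptResult("Best CRM for agencies", "chatgpt", True, True, True),
    PromptResult("Best CRM for agencies", "perplexity", True, False, False),
    PromptResult("CompetitorCo alternatives", "chatgpt", False, False, False),
    PromptResult("ExampleCo pricing, pros, cons", "perplexity", True, True, True),
]
print(leading_indicators(log))
```

To get per-platform numbers, filter the log by `platform` before calling the function. Keeping accuracy scoped to mentions stops a low inclusion rate from masking misrepresentation.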

Then connect to business outcomes:

- Pipeline influenced by organic sessions that originate from AI citations

- Sales cycle acceleration for accounts that engage with your “reference assets”

- Conversion lift on pages that become commonly cited

Also, be aware of reporting caveats. Coverage of Search Console reporting changes suggests AI experiences can be rolled into overall performance data rather than neatly separated, so you may need external tracking and prompt-based measurement to isolate changes.

When you report this to leadership, don’t lead with “AI is scary.” Lead with market reality:

- Clicks are becoming less reliable as the only indicator of influence.

- The new win condition is becoming the cited, trusted source.

- And you can measure that with a clear operating cadence.

Your AI visibility implementation roadmap

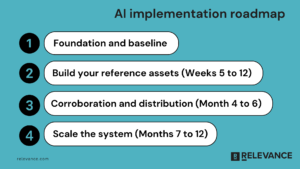

You can do this with a lean team, but you need a sequence. The teams that try to “optimize everything” burn out and ship nothing that compounds.

Phase 1: Foundation and baseline (Weeks 1 to 4)

Start by defining your AI visibility targets: the 20 to 50 prompts that map to revenue intent.

Then run a baseline audit across your two priority platforms. Capture inclusion, accuracy, and preference.

In parallel, clean up the basics: indexation, canonical issues, broken schema, outdated product pages, inconsistent messaging. This is the unglamorous work that makes everything else stick.

The deliverable at the end of Phase 1 is a one-page AI visibility scorecard that says: here’s where we show up, here’s where we’re missing, here’s where we’re misrepresented.

Phase 2: Build your reference assets (Weeks 5 to 12)

Pick three assets that you can realistically ship:

- A category definition page that doubles as an evaluation guide

- Two comparison pages that are fair, detailed, and evidence-based

- A retrofit of your top five organic pages into answer-ready “chunks”

Don’t try to publish 30 new blog posts. Publish fewer, better assets that can become the sources answers pull from.

At the end of this phase, you should see citation inclusion start to move, even if traffic does not spike.

Phase 3: Validation and distribution (Months 4 to 6)

Now you make the web agree with you.

Launch a digital PR push around one data asset or benchmark. Build partner pages and integrations content that creates third-party confirmation. Push for credible reviews and community discussions that reflect real outcomes.

This is where AI visibility starts compounding, because you’re creating multiple independent sources that reinforce the same narrative.

Phase 4: Scale the system (Months 7 to 12)

By now, you should know what prompts you win and which you don’t.

Scale looks like:

- Expanding your prompt set and tracking cadence

- Creating a repeatable quarterly research or benchmark program

- Adding more comparison content for your highest conversion competitors

- Investing in structured data and feeds if you’re product-heavy

At the end of 12 months, AI visibility should feel like a durable part of your organic growth engine, not an experiment that disappears when priorities shift.

What our research team is seeing today

AI visibility work in late 2025 has one theme: the ceiling is rising.

AI Overviews are expanding in prevalence, and credible studies keep reinforcing what practitioners feel in their dashboards: top-of-funnel clicks are harder to count on. When leadership asks why traffic is flat even though rankings look stable, this is often the missing context.

We’re also seeing a growing separation between brands that publish “content” and brands that publish sources. The pages that get pulled into AI answers tend to have clear definitions, concrete qualifiers, and explicit structure. Vague thought leadership does not travel well inside summaries.

The third shift is commerce. OpenAI is pushing both shopping research and agentic commerce standards, which signals a move from “answer engines” into “action engines.” If you sell products and your data is messy, AI visibility is going to feel like a tax. If your data is clean, it becomes a channel.

One important caveat: measurement still lags behavior. Reporting for AI-driven impressions and clicks is not always cleanly segmented, which makes prompt-based tracking and controlled experiments more valuable than ever.

What top experts are saying

Most serious practitioners are converging on a surprisingly grounded take: the fundamentals still matter, but the interface changes the playbook.

Google’s own Search Central documentation on AI features tells site owners that SEO best practices remain relevant, while also acknowledging new technical and measurement considerations for AI experiences. In other words, you can’t hack your way into AI Overviews with gimmicks. You need quality, clarity, and crawlable structure.

Aleyda Solis has been explicit about the overlap and the differences between classic SEO and optimization for AI search experiences, including changes in user behavior and KPIs. That lines up with what we see in practice: rankings alone are an incomplete scorecard.

Rand Fishkin’s work has long highlighted the rise of zero-click behavior, and his 2024 zero-click study quantifies how many searches end without a click to the open web. The AI era makes that trend more obvious, and it’s why brand-driven demand and memorability are becoming inseparable from “SEO strategy.”

On the technical side, Michael King at iPullRank has emphasized that AI Overviews can pull specific passages and relevance signals rather than rewarding generic pages, which pushes teams toward structured, extractable content and stronger information architecture.

Finally, the platforms themselves are framing this as a transparency plus sourcing game. Microsoft positions Copilot Search around prominent citations so users can validate. Perplexity describes real-time search plus summarized answers that reference sources. If you want to be “the answer,” you need to be “the source.”

Common mistakes that hurt AI visibility

Most teams don’t fail because they didn’t do enough. They fail because they did the wrong kind of work.

The first mistake is chasing volume. Google’s guidance on generative AI content is clear that scaled content without value can violate spam policies. If your plan is “publish 200 AI posts,” you’re building a liability.

Another common miss is ignoring the facts layer. If your pricing model, positioning, and feature set are inconsistent across your site and third-party sources, AI systems will either skip you or describe you incorrectly. Fixing this feels like ops work, but it’s often the fastest path to improved accuracy.

A third mistake is treating PR and reviews as “brand projects.” In an AI-mediated world, third-party validation is fuel for citations. If you’re invisible across credible sources, you’re asking an answer engine to take your word for it.

Finally, teams measure the wrong thing. If you only report rankings and organic sessions, you’ll miss the real shift: influence can happen inside summaries with fewer clicks. You need share of answers and citation inclusion as first-class metrics.

Who should and shouldn’t prioritize AI visibility

You should prioritize AI visibility if your buyers research online, compare vendors, and ask detailed questions before they buy. That’s most B2B SaaS, most consumer consideration purchases, and basically any category where “best tool for X” drives meaningful revenue.

You should also prioritize it if you’re seeing organic traffic flatten while brand interest stays steady. That’s often a sign that discovery is happening, but clicks are getting absorbed by on-SERP answers.

On the flip side, if you need pipeline next month and you have no organic foundation, AI visibility should not be your primary bet. You’re better off using paid and outbound to create demand while you build the assets and validation that make AI visibility possible.

The honest framing: AI visibility is a compounding advantage. It can be a growth engine, but it rewards teams that can stick with a system for months, not teams looking for a two-week hack.

Final thoughts

AI visibility is not a replacement for SEO. It’s the next layer of it.

The win is no longer just ranking. It’s being included, being described correctly, and being recommended when buyers ask the questions that actually lead to revenue. That happens when you combine entity clarity, answer-ready content, third-party validation, structured data, and a measurement loop you can run every week.

Start small. Pick your platforms. Define your prompt set. Ship reference assets that deserve to be cited. Then build validation so the web agrees with you.

That’s how you become the source the answer is made from.

How we research articles at Relevance

Relevance is a growth marketing, SEO, and PR agency. We write from the operator’s seat: what we see when we audit sites, ship content systems, run distribution, and then deal with the reporting questions that follow. Our goal is to translate messy platform changes into a plan a lean team can actually execute.

We supplement that hands-on experience by reviewing primary documentation from platforms and credible third-party research. For this article, we focused on sources that describe how AI search experiences work, what they cite, and what measurable impact they’re having on user behavior and clicks.

Frequently Asked Questions

As AI answers replace more clicks, the goal shifts from “ranking a page” to becoming the source those answers are built from.

What does AI visibility mean?

AI visibility is your brand’s ability to show up accurately and favorably inside AI-mediated discovery and decision experiences. That includes being cited in AI-generated answers, being named in category and comparison prompts, and having key product facts (like positioning, integrations, and pros/cons) represented correctly. A simple way to think about it is three stacked outcomes: inclusion (you show up), accuracy (you’re described correctly), and preference (you’re recommended when alternatives exist).

How is AI visibility different from traditional SEO?

AI visibility is built on SEO fundamentals, but it’s measured differently. Traditional SEO is mostly about ranking pages and earning the click. AI visibility is about answer composition: creating content, entities, and validation that an AI system is willing to synthesize and cite. In AI answer experiences, success can look less like “rank #2” and more like “be one of the cited sources” and “be the vendor named when the user asks what to buy next.”

How to check AI visibility?

Start with a defined prompt set tied to revenue intent, then track three outcomes: inclusion, accuracy, and preference. Run weekly prompt testing (for example: “Best [category] for [ICP],” “[Your brand] pricing, pros, cons,” “X vs Y,” and “alternatives”) and log whether you appear, whether you’re cited, and whether the description matches your intended positioning. Use leading indicators like citation rate, share of answers for priority prompts, and accuracy/sentiment inside answers, then connect directional signals to outcomes like influenced pipeline and conversion lift on commonly cited pages.

What are the key pillars that drive AI visibility?

Most AI visibility gaps map to five pillars: (1) entity clarity and trust signals (consistent naming, positioning, and credibility), (2) answer-ready content architecture (clear, chunkable sections with definitions and qualifiers), (3) third-party validation (credible mentions, reviews, and community discussion that confirm your narrative), (4) structured data and machine readability (clean crawl paths, canonicals, schema, and accessible key info), and (5) measurement and feedback loops (prompt testing, citation tracking, and iterative fixes).

What SEO strategies are recommended for AI visibility?

Keep SEO fundamentals strong, then shift execution toward what answer engines can extract and trust. Practical moves include building an AI-ready category page with a plain-language definition, jobs-to-be-done, and a “how to choose” framework; creating genuinely fair comparison pages with real tradeoffs and objective criteria; retrofitting top organic pages with crisp definitions, FAQs, “common mistakes,” and concrete examples; and aligning brand facts across your site and third-party sources so models don’t get conflicting signals. Structured data helps key facts travel better than prose.

What’s Generative Engine Optimization and how does it work?

Generative Engine Optimization (often shortened to GEO) is one of several labels used for optimizing visibility in AI answer experiences rather than classic SERPs. The useful idea is that AI-mediated results change what you optimize for and which KPIs matter, even though search fundamentals still apply. In practice, GEO overlaps with AI visibility work like producing answer-ready content, strengthening entity clarity and trust, earning third-party validation, and improving machine readability so your information is easier to synthesize and cite.

What’s the difference between awareness and visibility?

Awareness is being known; visibility in AI-mediated experiences is being included and represented in the answers people rely on while researching and deciding. PR has traditionally been treated as “awareness,” but in AI discovery it becomes operational because AI systems validate claims across multiple sources. That means earned media, authoritative mentions, and credible reviews can function as evidence that makes your brand easier to cite and trust—moving visibility from a brand nice-to-have into a compounding advantage.

Why is AI visibility becoming important now?

Two shifts are colliding: search is turning into an answer engine, and discovery is spilling outside Google into chat-based experiences and copilots. When AI summaries satisfy intent on the results page, click behavior can change even if rankings don’t. At the same time, the same question is now asked across multiple platforms with different sourcing behaviors. That raises the stakes for being a clear, verifiable entity with content and third-party signals that can be synthesized, cited, and trusted.