If you are watching your organic clicks flatten while your CEO asks, “Why are we not showing up in ChatGPT answers?” you are not imagining it. We are in a world where two systems shape discovery at the same time: classic search rankings and generative answer engines that synthesize, cite and sometimes skip the click.

Here is the uncomfortable truth we have been telling clients all year. AI does not “rank” your content the way Google did in 2016. It tries to decide whether your information is safe to repeat, easy to verify and likely to satisfy the query. That is a different game, and it is why some brands with mediocre backlink profiles keep getting cited, while others with strong SEO foundations get ignored.

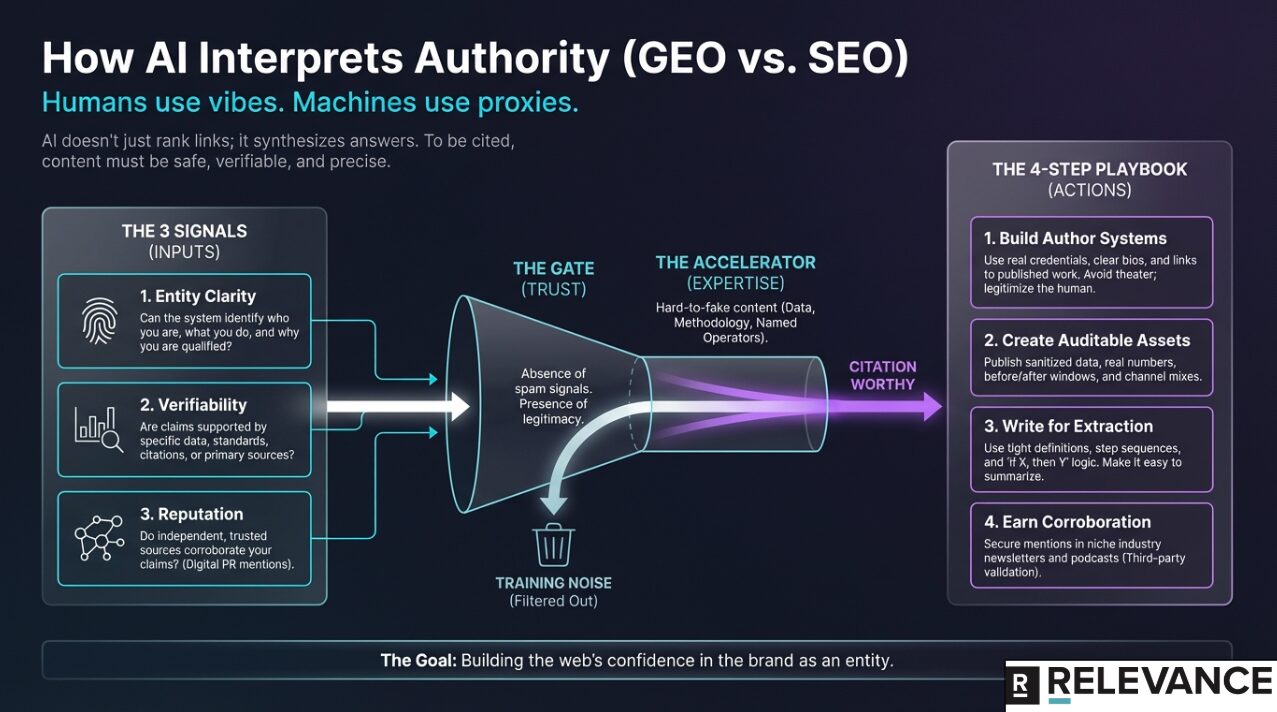

What “authority” looks like to a machine

Humans use vibes. Machines use proxies.

In Google’s public documentation about search quality rating, raters evaluate pages using E-E-A-T (experience, expertise, authoritativeness and trust), especially for “Your Money or Your Life” topics. Google also says those rater scores do not directly move your site up or down. They are used in aggregate to evaluate and improve its systems.

Generative engines borrow the same spirit, even when they are not Google. When a model is about to answer “best ERP for manufacturers” or “how to treat dog diarrhea,” it has to make a call: can I repeat this without harming the user or embarrassing myself with something untrue?

So authority becomes less about “how many people linked to you” and more about “how many independent signals suggest you are the right entity to trust on this topic.”

Here are the three signals we see matter most in practice:

- Entity clarity: Can the system tell who you are, what you do and why you are qualified?

- Verifiability: Are your claims supported by specific data, standards, citations or primary sources?

- Reputation outside your site: Do other trusted places describe you consistently?

That last one is why digital PR is suddenly back on the menu, particularly for industries like healthcare where trust is non-negotiable. Not the spray-and-pray guest post stuff, but real mentions in places the model is comfortable repeating.

Trust is the gate, expertise is the accelerator

Marketers love to talk about expertise because it is flattering. Trust is the piece that actually gets you included.

Trust, in this context, is boring. It is the presence of basic legitimacy signals and the absence of “this feels scammy” signals. Google’s guidelines spend a lot of time on identifying who is responsible for the content, how transparent the site is and whether the page is untrustworthy or misleading.

In 2025, trust also includes not looking like scaled content spam. Google’s spam policies explicitly call out behaviors that can get pages ranked lower or removed from results. If you pumped out 300 “best X” pages with near-identical templates and zero original insight, you did not just create thin SEO content. You created training noise that answer engines learn to distrust.

Once you clear trust, expertise helps you win the citation. Expertise shows up when the content contains things that are hard to fake:

- A real comparison table you built from hands-on testing.

- A quote from a named operator.

- A clear methodology.

- A specific benchmark.

- A screenshot of the workflow inside GA4 or HubSpot that proves you did the work.

How AI decides what to cite

Most teams assume the model just cites whoever ranks No. 1. That is not how it works in many answer experiences.

Modern systems often retrieve a set of documents, then synthesize an answer. Research on Generative Engine Optimization (GEO) frames this as optimizing for visibility inside generative responses and proposes methods to measure and improve it. In plain English, you are not only competing for a click. You are competing to be one of the sources the model pulls into the response.

That is also why “citation features” are becoming a product category. Anthropic, for example, launched a citations capability for Claude that grounds outputs in specific passages from provided documents. The broader market is pushing toward verifiable answers because hallucinations are a business risk, not a funny demo fail.

Which means your job is to make your best content easy to retrieve, easy to extract and easy to justify.
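To make the retrieve-then-synthesize idea concrete, here is a toy sketch in Python. It is not how any particular answer engine works: the scoring is plain word overlap, and the URLs, page text and function names are all hypothetical. The only point is that a page has to land in the retrieved set before it can be cited at all.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, passage: str) -> int:
    """Toy relevance: count query tokens that also appear in the passage."""
    query_counts = Counter(tokenize(query))
    passage_counts = Counter(tokenize(passage))
    return sum(min(query_counts[t], passage_counts[t]) for t in query_counts)


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the top-k source names with a non-zero score, best first."""
    scored = [(overlap_score(query, text), source) for source, text in documents.items()]
    scored = [pair for pair in scored if pair[0] > 0]
    # Sort by score descending, then by source name for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [source for _, source in scored[:k]]


# Hypothetical pages: only the retrieved ones can end up as citations.
documents = {
    "vendor-a.example/erp-benchmark": "ERP benchmark for manufacturers: implementation time and cost by plant size",
    "vendor-b.example/blog": "Ten reasons our platform is the best platform",
    "trade-journal.example/erp-guide": "How manufacturers should evaluate ERP software: a checklist",
}

cited = retrieve("best ERP for manufacturers", documents, k=2)
print(cited)
```

In this sketch the page that merely calls itself “the best” loses to the two pages that actually talk about ERP and manufacturers. Real retrieval is far more sophisticated, but the lesson carries over: specific, on-topic passages are easier to pull in than generic claims.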

The playbook we use to make content “citation worthy”

When we run this as a project, we treat it like technical SEO meets PR meets editorial standards. The fastest wins usually come from making your authority legible, not from publishing more.

Four moves tend to work without needing a giant budget:

- Build an “About this author” system that does not feel like theater. Give every serious article an author page with real credentials, a clear bio, links to interviews, conference talks or published work, and a way to contact the business. If you have subject matter experts, put them on the page and let them own claims.

- Turn your best claims into auditable assets. If you say “we cut CAC by 22 percent,” show the before and after window, the channel mix and what changed. If you cannot show it publicly, publish a sanitized version with numbers that still mean something.

- Write for extraction, not vibes. The answer engines love tight definitions, step sequences and “if X, then Y” logic. That does not mean robotic writing. It means you earn the right to be summarized.

- Earn third-party corroboration. The cheapest version is a founder podcast tour plus a handful of niche industry newsletters. The higher leverage version is one or two strong data stories a quarter pitched to outlets that actually have editorial standards.
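As an illustration of the “auditable assets” move, here is a minimal Python sketch of how a “we cut CAC by 22 percent” claim could be backed by its inputs. Every number and label is hypothetical, and `Window` and `cac_change_pct` are names we made up for this example. CAC here is simply spend divided by new customers; your own definition may include more cost lines.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """One measurement window behind a published claim (hypothetical data)."""
    label: str
    spend: float
    new_customers: int

    @property
    def cac(self) -> float:
        # Customer acquisition cost = total spend / customers acquired.
        return self.spend / self.new_customers


def cac_change_pct(before: Window, after: Window) -> float:
    """Percent change in CAC from the before window to the after window."""
    return (after.cac - before.cac) / before.cac * 100


# Hypothetical before/after windows; swap in your real (or sanitized) numbers.
before = Window("Before window (hypothetical)", spend=50_000, new_customers=100)
after = Window("After window (hypothetical)", spend=46_800, new_customers=120)

for window in (before, after):
    print(f"{window.label}: spend={window.spend:,.0f}, "
          f"customers={window.new_customers}, CAC={window.cac:,.2f}")
print(f"CAC change: {cac_change_pct(before, after):.1f}%")
```

Publishing the windows and inputs next to the headline number is what lets a reader, or a retrieval system, check the claim instead of taking it on faith.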

If you want a quick gut-check, ask: “Would a cautious analyst cite this in a deck?” If the answer is no, a model is unlikely to cite it either.

Here is the only list you need to kick this off in the next two weeks:

- Publish author pages for your top five traffic drivers.

- Add sources or primary data to your top 10 “money” articles.

- Build one original benchmark with a simple methodology.

- Pitch that benchmark to five credible industry publications.

Measuring progress when analytics does not cooperate

Right now, measurement is messy. Practitioners note that Search Console has no clean report that isolates AI Overview impressions and clicks, so you end up triangulating with fixed query sets and manual checks.

In practice, we track three things:

First, a fixed list of target queries and whether you appear as a citation across Google AI Overviews, Perplexity and ChatGPT-style search experiences.

Second, branded search lift. If more people search your name, you are teaching both humans and machines that you are a real option.

Third, assisted conversions from organic and direct in your attribution model. You are not doing this just to “win AI.” You are doing it because the same trust signals improve conversion rate once people land.
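A spreadsheet is enough for the first two metrics, but here is a minimal Python sketch of the same bookkeeping. It assumes you log each manual check as a small record. The engine labels, queries, search volumes and function names are hypothetical, and nothing here calls a real API.

```python
from collections import defaultdict


def citation_rate_by_engine(checks: list[dict]) -> dict[str, float]:
    """Share of checked queries where the brand was cited, per engine."""
    totals: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for check in checks:
        totals[check["engine"]] += 1
        cited[check["engine"]] += int(check["cited"])
    return {engine: cited[engine] / totals[engine] for engine in totals}


def branded_lift_pct(baseline_searches: int, current_searches: int) -> float:
    """Percent change in branded search volume versus a baseline period."""
    return (current_searches - baseline_searches) / baseline_searches * 100


# Hypothetical manual checks: one row per (query, engine) pair you looked at.
checks = [
    {"query": "best erp for manufacturers", "engine": "ai_overviews", "cited": True},
    {"query": "erp implementation cost", "engine": "ai_overviews", "cited": False},
    {"query": "best erp for manufacturers", "engine": "perplexity", "cited": True},
    {"query": "erp implementation cost", "engine": "perplexity", "cited": True},
    {"query": "best erp for manufacturers", "engine": "chatgpt_search", "cited": False},
    {"query": "erp implementation cost", "engine": "chatgpt_search", "cited": False},
]

for engine, rate in citation_rate_by_engine(checks).items():
    print(f"{engine}: cited in {rate:.0%} of checked queries")

# Hypothetical monthly branded search counts.
print(f"Branded search lift: {branded_lift_pct(1_000, 1_150):.1f}%")
```

Keep the query list fixed and rerun the checks on a regular schedule so the rates are comparable over time. A changing query set makes the trend meaningless.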

What to tell stakeholders

If leadership wants a single headline goal, give them this: “We are building the web’s confidence in our brand as an entity.”

That sounds fluffy until you connect it to outcomes. Better entity confidence usually means higher-quality leads from organic, lower sales friction because prospects have seen you referenced elsewhere, and a content moat that is harder for copycats to replicate.

Also, you do not have to choose between SEO and GEO. The teams that win treat GEO as the new packaging layer on top of the same fundamentals: trustworthy content, technical accessibility and real reputation.

Methodology

The insights in this article come from Relevance’s direct work with growth-focused B2B and ecommerce companies. We’ve run the campaigns, analyzed the data and tracked results across channels. We supplement our firsthand experience by researching what other top practitioners are seeing and sharing. Every piece we publish represents significant effort in research, writing and editing. We verify data, pressure-test recommendations against what we are seeing, and refine until the advice is specific enough to actually act on.