If you’ve ever typed your category into Perplexity, Gemini or Google’s AI Overviews and felt your stomach drop because your competitor is the “recommended” answer, you already understand the job: you’re not just fighting for rankings anymore. You’re fighting for inclusion. Not “page one.” Not even “top three.” You’re fighting to be one of the sources the model decides to cite or synthesize.

That’s what we mean by AI Authority Engineering. It’s the repeatable work of making your brand the most extractable, defensible source in your niche so AI systems can confidently pull you into answers, citations and follow-up paths. And yes, it’s different from classic SEO because generative search systems use techniques like query fan-out to assemble responses from multiple sub-queries and sources.

Here’s the playbook we’ve been running with clients and on our own properties.

What AI authority engineering actually is

Traditional authority was mostly a ranking game: links, on-page relevance, technical hygiene. That stuff still matters.

But generative engines add a new filter: “Can I use this source in an answer without making myself look stupid?”

So AI authority engineering is about building three things at the same time:

- Entity clarity (who you are and what you’re “about”)

- Evidence density (why you’re credible on the topic)

- Retrieval friendliness (how easy it is for systems to quote, cite and stitch you into an answer)

For the full mental model, add two operating pieces to those three, distribution and a feedback loop:

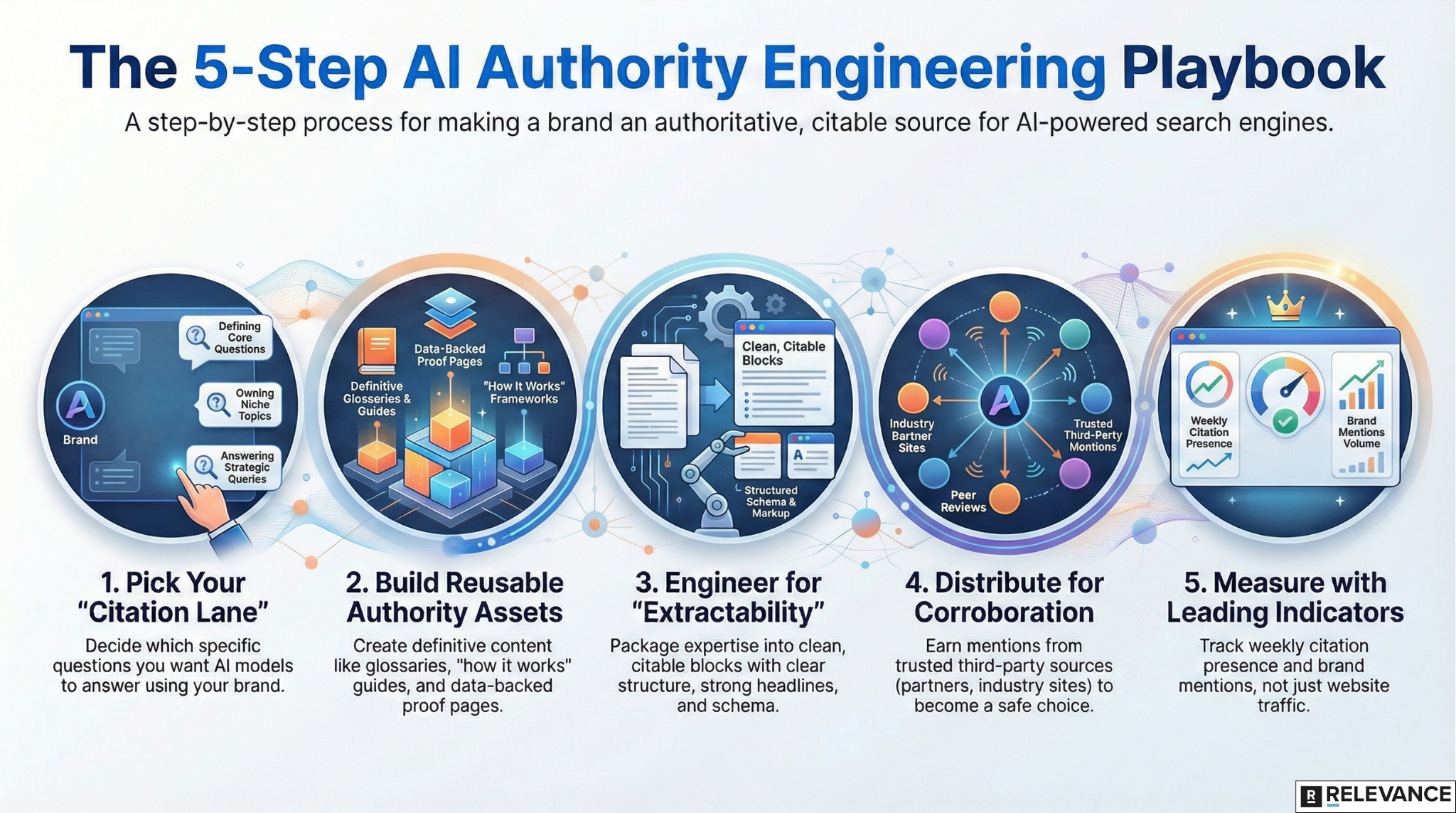

AI authority = Entity + Evidence + Extractability + Distribution + Feedback loop

Miss one, and you’ll feel it. Great content without distribution gets ignored. PR without extractability turns into “mentions” that never get cited. Schema without real evidence is just well-labeled fluff.
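The formula reads as a sum, but “miss one, and you’ll feel it” behaves more like a weakest-link rule. If you want to turn the model into a quick self-audit, here’s a minimal sketch. It assumes you score each component yourself on a 0 to 1 scale; `authority_readiness` and the scoring scale are hypothetical, not a metric any AI engine exposes. A geometric mean drops to zero whenever any single component is zero, which matches the point above better than adding the scores would.

```python
from math import prod

# Component names mirror the formula above; the 0-1 scores are your own
# (hypothetical) self-assessment, not a metric any AI engine exposes.
COMPONENTS = ("entity", "evidence", "extractability", "distribution", "feedback_loop")


def authority_readiness(scores: dict) -> tuple:
    """Return (geometric mean, weakest component) for 0-1 component scores.

    The geometric mean collapses to zero when any single component is zero,
    which tracks "miss one, and you'll feel it" better than a plain sum.
    """
    for name in COMPONENTS:
        if name not in scores:
            raise ValueError(f"missing score for {name}")
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    values = [scores[name] for name in COMPONENTS]
    geometric_mean = prod(values) ** (1 / len(values))
    weakest = min(COMPONENTS, key=lambda name: scores[name])
    return geometric_mean, weakest


score, bottleneck = authority_readiness({
    "entity": 0.8, "evidence": 0.9, "extractability": 0.7,
    "distribution": 0.0, "feedback_loop": 0.6,
})
print(round(score, 2), bottleneck)  # 0.0 distribution
```

Strong entity, evidence and extractability scores can’t compensate for zero distribution, which is exactly the “great content without distribution gets ignored” failure.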

Step one: pick the “citation lane” you want to own

Most teams start by asking, “How do we get cited more?” Wrong starting point.

Start by deciding which questions you want models to answer with your brand attached. In practice, that’s usually a tight mix of “definition” queries, “how-to” queries and “best tool/vendor” queries.

Before you touch content, you need four inputs:

- Your highest-LTV product line or use case

- The top five objections Sales hears weekly

- The comparison you keep losing (brand X vs. you)

- The one stat your CEO repeats in every pitch

This is where we see teams waste quarters. They publish “thought leadership” on topics they can’t win because they don’t have proof, product differentiation or a real point of view.
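Once you have those inputs, it helps to write the lane down as an explicit list of queries you’ll track. A minimal sketch, assuming simple string templates for the three query types above plus the comparison you keep losing. The template wording, `TEMPLATES` and `build_query_map` are hypothetical, and this only covers the query side: the objections and the CEO stat feed the content, not the list.

```python
# Hypothetical templates for the lanes above: definition, how-to and
# best tool/vendor (including the head-to-head comparison you keep losing).
TEMPLATES = {
    "definition": ["what is {term}", "{term} meaning"],
    "how_to": ["how to {use_case}", "how does {term} work"],
    "vendor": ["best {category} for {use_case}", "{competitor} vs {brand}",
               "{competitor} alternatives"],
}


def build_query_map(brand, category, term, use_case, competitors):
    """Expand the inputs into a de-duplicated list of (lane, query) pairs to track."""
    seen, query_map = set(), []
    for lane, templates in TEMPLATES.items():
        for template in templates:
            # Only loop over competitors when the template actually names one.
            rivals = competitors if "{competitor}" in template else [None]
            for rival in rivals:
                query = template.format(brand=brand, category=category, term=term,
                                        use_case=use_case, competitor=rival).lower()
                if query not in seen:
                    seen.add(query)
                    query_map.append((lane, query))
    return query_map


for lane, query in build_query_map("Acme", "invoice automation software",
                                   "invoice automation", "automate AP approvals", ["Rival"]):
    print(f"{lane:<10} {query}")
```

Keeping the list small and explicit matters later: it’s the same set of “mapped queries” you check for citations every week.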

Step two: build authority assets models can safely reuse

Generative engines are lazy in a specific way. They love sources that already look like a clean answer. When the system is assembling a response across fan-out subtopics, your job is to be the page that makes the stitching easy.

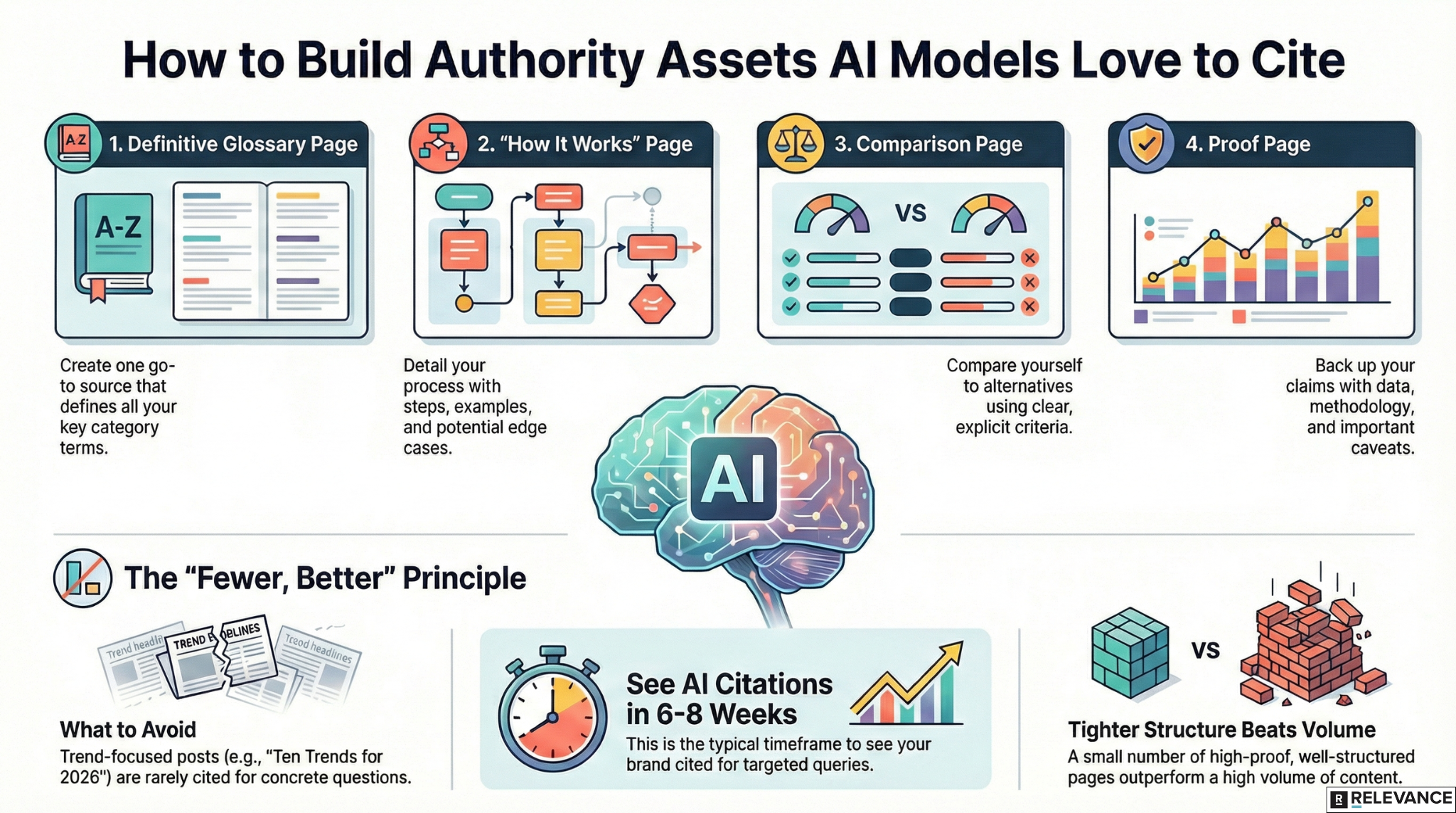
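Query fan-out is easier to reason about with a toy model. This sketch is not how any specific engine works: the sub-query expansion is a plain string template (real systems generate sub-queries with a model and rank sources very differently), and `fan_out`, `source_coverage`, `FAKE_INDEX` and the `.example` domains are all hypothetical. What it shows is the mechanic behind “make the stitching easy”: a page that answers several subtopics shows up across more sub-queries than pages that each answer one.

```python
from collections import Counter


def fan_out(question, subtopics):
    """Toy expansion: one sub-query per subtopic (real engines use a model for this)."""
    return [f"{question} {topic}" for topic in subtopics]


def source_coverage(sub_queries, retrieve, top_k=3):
    """Count how many sub-queries each source appears for within the top_k results."""
    counts = Counter()
    for sub_query in sub_queries:
        # set() so a source listed twice for one sub-query is only counted once
        counts.update(set(retrieve(sub_query)[:top_k]))
    return counts


# Hypothetical retrieval results standing in for a real search index.
FAKE_INDEX = {
    "crm migration cost": ["rival.example/pricing", "yourbrand.example/how-it-works"],
    "crm migration timeline": ["yourbrand.example/how-it-works", "blog.example/trends"],
    "crm migration risks": ["yourbrand.example/how-it-works", "rival.example/pricing"],
}

sub_queries = fan_out("crm migration", ["cost", "timeline", "risks"])
coverage = source_coverage(sub_queries, lambda q: FAKE_INDEX.get(q, []))
print(coverage.most_common())
```

In this toy index, the “how it works” page covering cost, timeline and risks appears for all three sub-queries, while the pricing page and the trends post each cover fewer.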

The asset types that consistently earn citations for us:

- One definitive glossary page for your category terms

- One “how it works” page with steps, edge cases and examples

- One comparison page (you vs. alternatives) with clear criteria

- One proof page with numbers, methodology and caveats

Notice what’s missing: “ten trends in 2026.” Trends posts can help distribution, but they’re rarely the canonical source an AI engine wants to cite when someone asks a concrete question.

Also, you do not need 50 pages to start. In a recent B2B SaaS engagement, we shipped eight “authority assets” in three weeks (two writers, one SME, one editor).

Within about six to eight weeks, we started seeing the brand show up in AI answer citations for the exact comparison and “how-to” queries we mapped. That timeline varies by niche, but the pattern has held: fewer pages with more proof and tighter structure beat volume.

Step three: engineer extractability so your best ideas don’t get skipped

This is the unsexy part that moves the needle.

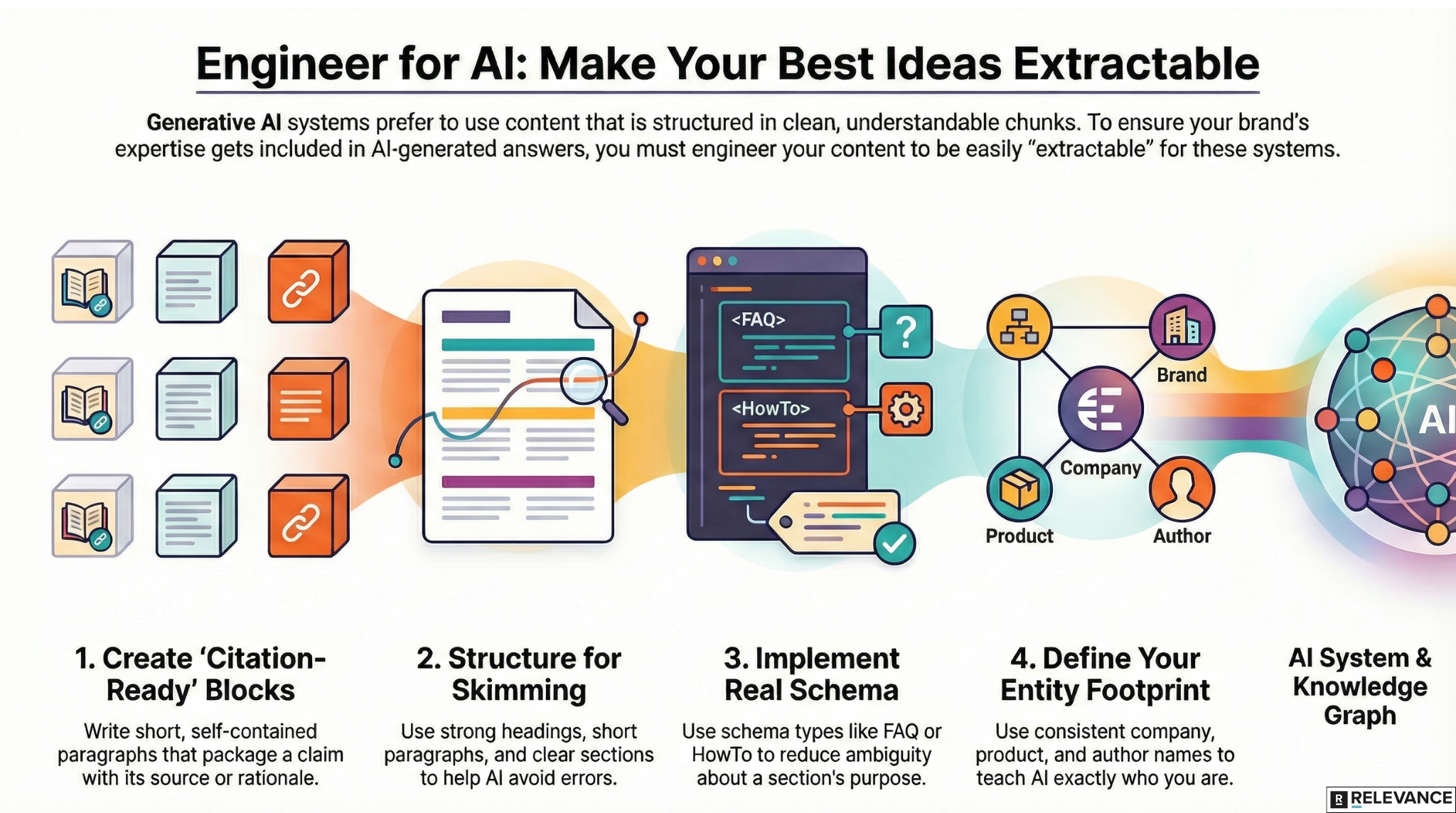

Generative systems want clean chunks: definitions, claims with support, lists that are actually lists, tables that are truly comparable. You’re essentially packaging your expertise for retrieval.
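A quick way to see your page the way a chunk-based retriever might is to split it at headings and look at each piece on its own. A minimal sketch, assuming your draft is in markdown; `split_into_chunks`, `audit_chunks`, the 120-word ceiling and the “no figures” check are hypothetical heuristics of ours, not thresholds any engine publishes.

```python
import re

HEADING = re.compile(r"^#{1,6}\s+(.+)$")


def split_into_chunks(markdown_text):
    """Split markdown into (heading, text) chunks at each heading line.

    Text before the first heading is labeled "(intro)"; headings with no
    body text under them are skipped.
    """
    chunks, heading, body = [], "(intro)", []
    for line in markdown_text.splitlines():
        match = HEADING.match(line.strip())
        if match:
            if body:
                chunks.append((heading, " ".join(body)))
            heading, body = match.group(1).strip(), []
        elif line.strip():
            body.append(line.strip())
    if body:
        chunks.append((heading, " ".join(body)))
    return chunks


def audit_chunks(chunks, max_words=120):
    """Flag chunks that are long to quote, or that carry no figure at all."""
    findings = []
    for heading, text in chunks:
        word_count = len(text.split())
        if word_count > max_words:
            findings.append((heading, f"{word_count} words: consider splitting"))
        if not re.search(r"\d", text):
            findings.append((heading, "no figures: add data, sample size or date if you have them"))
    return findings


page = "# What is X\nX is a thing.\n\n## Results\nWe saw a 12% lift across 40 accounts.\n"
for heading, issue in audit_chunks(split_into_chunks(page)):
    print(f"{heading}: {issue}")
```

Treat the output as prompts for an editor, not rules: a definition block may legitimately have no numbers, but a “results” block without them is a missed citation.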

What we do almost every time:

- Write “citation-ready” blocks. Short paragraphs that contain a claim, the qualifier and the source or rationale. If you have original data, spell out the sample size and window right there, not buried in a PDF.

- Structure for skimming. Strong H2s that match user intent, short paragraphs, explicit “when this fails” sections. Models tend to reward pages that help them avoid hallucination risk, and that means your nuance becomes an asset, not a liability.

- Use schema where it’s real. FAQ, HowTo, Product, Organization, Person. Not because schema is magic, but because it reduces ambiguity about what a section is.

- Tighten your entity footprint. Consistent company name, consistent product names, consistent author bios, consistent About page language. You’re teaching systems what entity they’re looking at.
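For the schema and entity points above, JSON-LD is the usual way to ship it. A minimal sketch that builds schema.org `Organization` and `FAQPage` markup; the helper names, the example company and the URLs are hypothetical. Two notes: keep the `name` string identical to your About page and author bios, and only mark up questions that are actually visible on the page. Google limited FAQ rich results to well-known government and health sites in 2023, so treat this as disambiguation rather than a way to win a SERP feature.

```python
import json


def organization_jsonld(name, url, same_as, logo=None):
    """Organization markup; `name` should match your About page and bios exactly."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,  # third-party profiles that corroborate the entity
    }
    if logo:
        data["logo"] = logo
    return data


def faq_jsonld(pairs):
    """FAQPage markup from (question, answer) pairs that appear on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": question,
             "acceptedAnswer": {"@type": "Answer", "text": answer}}
            for question, answer in pairs
        ],
    }


def script_tag(data):
    """Wrap markup in a JSON-LD script element.

    "</" is escaped as "<\\/" (valid JSON) so answer text can't close the tag early.
    """
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">{body}</script>'


org = organization_jsonld("Acme Analytics", "https://acme.example",
                          ["https://www.linkedin.com/company/acme-example"])
faq = faq_jsonld([("What is invoice automation?",
                   "Software that captures, routes and approves invoices without manual entry.")])
print(script_tag(org))
print(script_tag(faq))
```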

This matters even more as AI answers become a primary interface for search. Perplexity, for example, explicitly positions citations as a core transparency feature.

Step four: distribution that creates “boring, repeatable” corroboration

This is where most “AI optimization” advice gets sketchy. You don’t need hacks. You need corroboration across the web from sources that models already trust.

Think: industry associations, credible partner pages, podcasts with transcripts, conference talk recaps, reputable directories, analyst roundups, strong customer stories. The goal is not spammy mentions. It’s to create enough consistent third-party context that your brand becomes the safe choice to reference.

One underused move for B2B: publish a public “knowledge hub” that mirrors what your sales team sends in follow-ups (security answers, implementation timelines, pricing philosophy, integration docs). We’ve seen this reduce sales cycle friction and increase the number of long-tail questions you can credibly “own.”

Also, if you operate in Microsoft’s ecosystem, pay attention to how knowledge grounding works. Even Microsoft’s own Copilot Studio documentation frames public website knowledge sources as grounded via Bing search. In practice, an agent grounded that way can only draw on the public docs Bing can find, which should change how you think about “documentation as marketing.”
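One unglamorous check follows from that: make sure your robots.txt isn’t quietly blocking the crawlers behind these answer engines from your docs and knowledge hub. A minimal sketch using Python’s standard `urllib.robotparser`, run against a robots.txt body you paste in, so no network calls. The crawler tokens in `AI_CRAWLERS` are assumptions to verify against each vendor’s current documentation, and robots.txt access is only the first gate: a crawlable page still has to be indexed and worth citing.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens are assumptions; confirm them in each vendor's crawler docs.
AI_CRAWLERS = ["bingbot", "Googlebot", "OAI-SearchBot", "PerplexityBot"]


def crawler_access(robots_txt, urls, agents=AI_CRAWLERS):
    """Return {agent: [blocked urls]} for a robots.txt body, without fetching anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: [url for url in urls if not parser.can_fetch(agent, url)]
            for agent in agents}


ROBOTS = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Disallow: /private/
"""

DOCS = ["https://example.com/docs/setup", "https://example.com/private/pricing"]
for agent, blocked in crawler_access(ROBOTS, DOCS).items():
    print(agent, blocked or "all allowed")
```

In this example, every crawler is kept out of `/private/`, but a leftover `PerplexityBot` rule blocks the entire site, including the docs you want cited.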

Step five: measure authority like a growth team, not like an SEO team

If you wait for GA4 to show a clean “AI referrals” line item, you’ll be waiting a while. We treat measurement as a mix of direct checks and leading indicators.
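If you do want a rough direct check alongside the leading indicators, you can bucket referrers yourself from an analytics export. A minimal sketch; the hostname list in `AI_REFERRER_HOSTS` is an assumption to check against your own referral reports, and `classify_referrer` and `ai_referral_counts` are hypothetical helpers. Clicks from Google’s AI Overviews generally arrive with an ordinary google.com referrer, so they won’t show up here, which is part of why the indicators below matter.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; extend from your own reports.
AI_REFERRER_HOSTS = {
    "perplexity.ai": "Perplexity",
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url):
    """Return the assistant name for an AI referrer, or None for everything else."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, assistant in AI_REFERRER_HOSTS.items():
        # Match the domain itself or any subdomain (e.g. www.perplexity.ai).
        if host == domain or host.endswith("." + domain):
            return assistant
    return None


def ai_referral_counts(referrers):
    """Count sessions per assistant from a list of referrer URLs."""
    counts = Counter()
    for referrer in referrers:
        assistant = classify_referrer(referrer)
        if assistant:
            counts[assistant] += 1
    return counts


print(ai_referral_counts([
    "https://www.perplexity.ai/search/abc",
    "https://chatgpt.com/",
    "https://www.google.com/",
]))
```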

The leading indicators we track weekly:

- Citation presence for your mapped queries

- Brand mentions co-occurring with category terms

- Search Console lifts on top-of-funnel question pages

- Demo and sales-assisted mentions of “saw you in AI”
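For the first indicator, a simple log of weekly checks is enough to see a trend. A minimal sketch, assuming you (or a script) record one row per mapped query, engine and week with a yes/no for whether your domain was cited; `CitationCheck`, `citation_presence` and the ISO-week label format are hypothetical, and the article doesn’t prescribe a specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CitationCheck:
    week: str     # hypothetical ISO-week label, e.g. "2026-W05"
    engine: str   # e.g. "Perplexity", "AI Overviews", "Copilot"
    query: str    # one of your mapped queries
    cited: bool   # did the answer cite or link your domain?


def citation_presence(checks):
    """Return {(week, engine): share of checked queries that cited the brand}."""
    tallies = defaultdict(lambda: [0, 0])  # [cited, checked]
    for check in checks:
        tally = tallies[(check.week, check.engine)]
        tally[0] += int(check.cited)
        tally[1] += 1
    return {key: cited / checked for key, (cited, checked) in tallies.items()}


log = [
    CitationCheck("2026-W05", "Perplexity", "invoice automation vs manual ap", True),
    CitationCheck("2026-W05", "Perplexity", "what is invoice automation", False),
    CitationCheck("2026-W06", "Perplexity", "invoice automation vs manual ap", True),
    CitationCheck("2026-W06", "Perplexity", "what is invoice automation", True),
]
for (week, engine), share in sorted(citation_presence(log).items()):
    print(week, engine, f"{share:.0%}")
```

Keep the query set fixed between weeks; if you change the list, the share moves for reasons that have nothing to do with authority.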

Yes, you still care about rankings.

But in this era, visibility is not the same as traffic. And the reputational value of being the cited source can show up in the pipeline before it shows up neatly in last-click attribution.

The tradeoffs nobody tells you about

Two things to be honest about.

First, the legal and publisher landscape is messy. There’s active tension between AI answer products and content owners, including lawsuits involving Perplexity and major publishers. That means the rules of what gets crawled, cited and summarized will keep shifting.

Second, AI answers can be wrong, and that’s not a theoretical risk. Google’s AI Overviews have drawn criticism for misinformation in sensitive categories, and Google has removed some summaries after scrutiny. That means your job is to become the “safe” source by being specific, qualified and evidence-backed, not by being loud.

Where to start on Monday if you’re overloaded

If you’re running lean, do this:

Pick one high-intent use case. Build four authority assets. Add extractability improvements. Then run one distribution sprint (two to four weeks) to earn corroboration. Measure citations weekly, update pages monthly.

That’s it. AI Authority Engineering works when it’s treated like a system, not a stunt.

Methodology

The insights in this article come from Relevance’s direct work with growth-focused B2B and ecommerce companies. We’ve run the campaigns, analyzed the data and tracked results across channels. We supplement our firsthand experience by researching what other top practitioners are seeing and sharing. Every piece we publish represents significant effort in research, writing and editing. We verify data, pressure-test recommendations against what we are seeing, and refine until the advice is specific enough to actually act on.